FileMaker 13 introduces a new function that gives us some 30 different attributes about the data we store in a container field: GetContainerAttribute().

One of those attributes is the name of the file, which we can ask for like this:

GetContainerAttribute ( theContainer ; "filename" )

Another attribute tells us whether the file was inserted as a reference only:

GetContainerAttribute ( theContainer ; "storageType" )

The result will be one of:

- Embedded

- External (Secure)

- External (Open)

- File Reference

- Text

“Text” is what you get when the container’s content is not a file but some text that was inserted or pasted into the container field.

“External” indicates that the container field is set up for the “Remote Container” functionality.
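To make the list above concrete, here is a minimal Python sketch (not FileMaker code) of how a script could branch on the storageType result. It assumes the result strings are exactly the five values listed above; the names StorageType, parse_storage_type, is_remote_container and needs_reinsert are hypothetical. Anything else, such as the "?" returned for an empty container, is rejected rather than silently treated as embedded.

```python
from enum import Enum


class StorageType(Enum):
    """storageType results as listed in the article (exact strings assumed)."""
    EMBEDDED = "Embedded"
    EXTERNAL_SECURE = "External (Secure)"
    EXTERNAL_OPEN = "External (Open)"
    FILE_REFERENCE = "File Reference"
    TEXT = "Text"


def parse_storage_type(result: str) -> StorageType:
    """Map a raw storageType result to the enum.

    Raises ValueError for unexpected values, e.g. "?" for an empty container.
    """
    return StorageType(result.strip())


def is_remote_container(st: StorageType) -> bool:
    # Both "External" variants mean the field uses Remote Container storage.
    return st in (StorageType.EXTERNAL_SECURE, StorageType.EXTERNAL_OPEN)


def needs_reinsert(st: StorageType) -> bool:
    # Only referenced files have to be exported and inserted again.
    return st is StorageType.FILE_REFERENCE
```

For example, needs_reinsert(parse_storage_type("File Reference")) is True, while "Embedded" and both "External" values are left alone.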

Before FileMaker 12, when we wanted to keep the size of the FileMaker file down, the only option was to store container data by reference only. That way the files themselves were not stored inside the FileMaker file. The huge downside was that the path to the container files had to be accessible from each client through OS-level file sharing. That setup was not always trivial, especially in a mixed Windows/Mac environment. Backups were also complex since FileMaker Server only backed up the FileMaker file and not the referenced data.

That problem was solved in FileMaker 12 with Remote Containers. With that feature, FileMaker keeps the container data outside of the FileMaker file but still manages backups and access to those files, so that it works from all clients without the need for fragile OS-level sharing.

But there was one big caveat: when you hosted the file on FileMaker Server, the remote container folder had to be on the same drive as the FileMaker file; you could not choose your own location.

Now with FileMaker 13, that issue has been solved and you can specify your own folder where that container data will be stored.

So what do you do if you have a solution that currently uses a lot of referenced container data and you want to switch over to the improved Remote Container functionality? Your only real option is to re-insert each referenced file as an embedded file. If the container field is set up for remote storage, FileMaker will take care of that when you insert the data.

Fortunately, this does not have to be a manual process. Using the new container attributes, it is very easy to script. Below is an example of how.

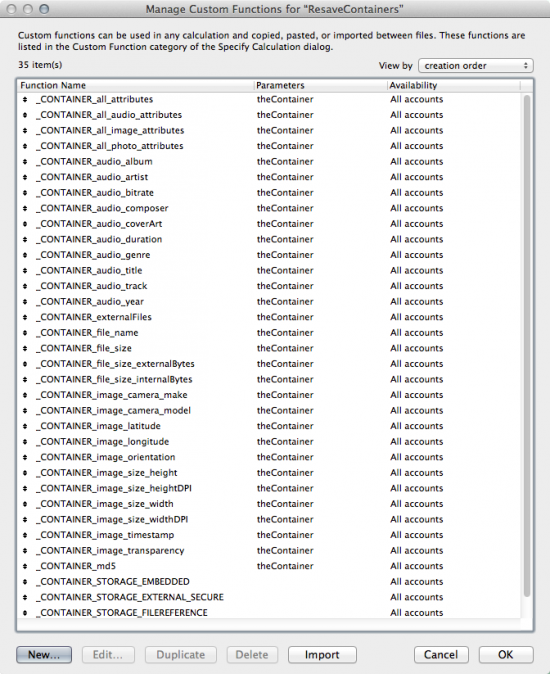

First of all, I’ve created custom functions for all 30 of the different attributes you can ask about a container:

So instead of doing this (and having to remember the name of the attribute):

GetContainerAttribute ( ContainerData::myContainer ; "storagetype" )

I can simply do this:

CONTAINER_storageType ( ContainerData::myContainer )

On to the script:

- The first thing we do is check whether the container data is stored as a reference. If not, there is nothing to do.

- If the container file is a reference, we ask for its name and also determine the file type (image, movie, file,…).

- When we have all the info we need, we export the referenced file to a temporary folder (FileMaker creates that folder for the duration of the script and then cleans up after itself when the script ends).

- After extracting the file we empty out the container.

- Then we re-insert the file from the temporary folder. This is where we need the file type, so that we can call the proper “Insert” script step.

And we’re done.
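The decision part of those steps can be sketched in Python (again, not FileMaker code) to show which Insert step and source path the script ends up using. It mirrors the script's own heuristic: the first word of the container's text gives the type, a ".pdf" file name forces PDF, and when the first line starts with "size" the type is read from line 2. The helper names are hypothetical, first_word only approximates FileMaker's LeftWords, temp_path stands in for Get ( TemporaryPath ), and "File Reference" is assumed to be the exact storageType string.

```python
import re
from typing import Optional, Tuple


def first_word(text: str) -> str:
    """Rough stand-in for FileMaker's LeftWords ( text ; 1 )."""
    match = re.search(r"[A-Za-z0-9_]+", text)
    return match.group(0) if match else ""


def detect_type(container_text: str, file_name: str) -> str:
    """Mirror the script: .pdf override, else first word, else line 2 after "size"."""
    if file_name.lower().endswith(".pdf"):
        return "PDF"
    kind = first_word(container_text)
    if kind.lower() == "size":
        # Probably an image: line 1 is the size, the path is on line 2.
        lines = container_text.splitlines()
        kind = first_word(lines[1]) if len(lines) > 1 else ""
    return kind


def plan_reinsert(storage_type: str, container_text: str,
                  file_name: str, temp_path: str) -> Optional[Tuple[str, str]]:
    """Return (insert step, source path), or None when nothing needs doing."""
    if storage_type != "File Reference":
        return None  # not a file reference, so nothing to convert
    kind = detect_type(container_text, file_name).lower()
    path = "file:" + temp_path + file_name  # where Export Field Contents writes
    if kind == "image":
        return "Insert Picture", path.replace("file:/", "image:/")
    if kind == "pdf":
        return "Insert PDF", path.replace("file:/", "image:/")
    if kind == "movie":
        return "Insert Audio/Video", path.replace("file:/", "movie:/")
    return "Insert File", path
```

With a hypothetical referenced image whose text is "size:300,200" followed by an "image:/…" path on line 2, and a temporary path of "/tmp/", plan_reinsert returns ("Insert Picture", "image:/tmp/photo.png") for a file named photo.png.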

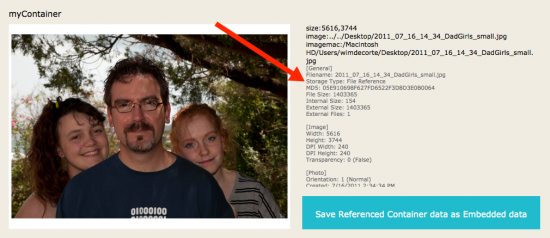

Here is a screenshot of the container and its info before running the script:

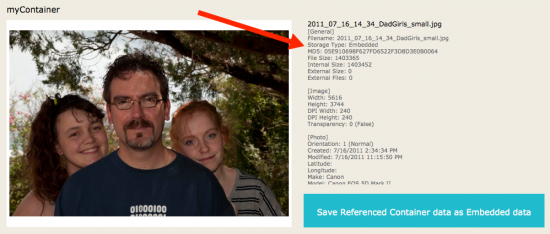

And after running the script:

Get the Demo

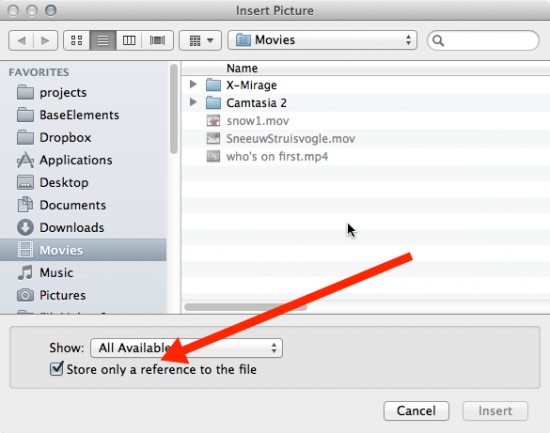

To try it out, manually insert a file into the container and make sure to set the “by reference” toggle:

Happy New Year!

#

Allow User Abort [Off]

Set Error Capture [On]

#

#

Set Variable [$tempPath; Value:Get ( TemporaryPath )]

#

# check if the container is by reference

If [_CONTAINER_storageType ( ContainerData::myContainer ) = _CONTAINER_STORAGE_FILEREFERENCE]

#

# it is, do an export field contents and then insert it again

# get the file name

Set Variable [$containerAsText; Value:ContainerData::myContainer]

Set Variable [$fileName; Value:_CONTAINER_file_name ( ContainerData::myContainer )]

#

# figure out what file type it is

Set Variable [$type; Value:LeftWords( $containerAsText ; 1 )]

If [Right( $fileName ; 4 ) = ".pdf" and $type <> "PDF"]

Set Variable [$type; Value:"PDF"]

Else If [$type = "size"]

# probably an image, the path is on the 2nd line, first line is the size

Set Variable [$type; Value:LeftWords( GetValue( $containerAsText ; 2 ) ; 1 )]

End If

#

# construct the temp path and file name

Set Variable [$temp; Value:"file:" & $tempPath & $fileName]

#

# export

Export Field Contents [ContainerData::myContainer; "$temp"]

#

# delete the container and commit

Set Field [ContainerData::myContainer[]; ""]

Commit Records/Requests [Skip data entry validation]

#

# now get the container back

Go to Field [Select/perform; ContainerData::myContainer]

If [$type = "image"]

Set Variable [$temp; Value:Substitute ( $temp ; "file:/" ; "image:/" )]

Insert Picture [$temp]

Else If [$type = "PDF"]

Set Variable [$temp; Value:Substitute ( $temp ; "file:/" ; "image:/" )]

Insert PDF [$temp]

Else If [$type = "movie"]

Set Variable [$temp; Value:Substitute ( $temp ; "file:/" ; "movie:/" )]

Insert Audio/Video [$temp]

Else

Insert File [ContainerData::myContainer[]; "$temp"]

End If

#

Commit Records/Requests [Skip data entry validation]

#

End If

Hi Wim,

I would like to backup the external container. Will I cause harm to the database as a whole or the contents of the external container if I use a third party backup solution to capture the external container? Thanks for any help.

-L

Hi Lorenzo,

There is a big risk there. The external container data really needs to be treated as any other live FileMaker file; that means no OS-level sharing, no external backups,…

Since that data gets backed up properly when FileMaker Server runs a backup schedule, you can use an external backup program that targets those FMS backups, provided you time it so that the external backup application does not try to grab files while they are actively being backed up.

Dear Wim,

Excellent solution!

Thank you =)

Neat and useful example 🙂

I’ve noticed that:

GetValue ( Table::someContainer ; 2 )

returns the filename of a previously referenced file. This may be passed directly to, e.g.:

Insert PDF

on an interactive container field, thus saving the export.

CHS

Hi Christian,

Can you expand a bit on how and when you see this?

Best regards,

Wim

For some reason, it’s still only returning a reference file for me…

Hi Jenn,

I would have to see some screenshots or some data to see what is going on. Feel free to post to FMforums.com or the FileMaker Community forum.

Hi Wim,

How can I get this to work on Windows? I’m getting file path errors, and substituting \ for / does not seem to work.

Hi Marty,

It should work just fine on Windows – just retested with FMPA15v2 on Windows 10 with a picture and a regular file. What is your content type?

I just took an app from FM12 to FM15. I had filenames of referenced files in the records. I made a new container field with OPEN STORAGE and ran an insert script like yours above. My file size blew up. My storage type attribute says “External (Open)”. It did store the files in the directory structure I specified, but it is apparently also embedding them. Also, I see the title of your article is “…to embedded”. Am I misunderstanding? I want external storage, not embedded. What might I be doing wrong?

–Thanks for your article; I’m almost there…

Hi Carol, the technique and script used for the article does indeed aim to “embed” the file in the sense of not only storing the reference to the file but the file itself. Depending on whether the container in FM is set to use remote container (RC) storage or not, the embedding will store that file internally or in the RC folder. I would have to see your file to help troubleshoot. Feel free to post on https://community.filemaker.com.

Hi Wim, great article! I only get an invalid value (?) for the “duration” parameter if the audio file is shorter than 7 seconds. Any idea why? Thanks

Thanks! Haven’t come across that particular issue, why don’t you post it on the FM Product Issue forum and see if others have experienced the same: https://community.filemaker.com/community/discussions/report-an-issue

It is a great article but I cannot get storageType with either method, I only get a “?” in the field. I used these methods with my container field named “_f_SiteMap”:

GetContainerAttribute ( _f_SiteMap ; "storagetype" )

CONTAINER_storageType ( _f_SiteMap )

Am I missing something?

Thanks!

Just re-tested in FM15 and the only time I get a “?” as the result is when the container field is empty. If that is not the issue then feel free to post on http://community.filemaker.com or http://fmforums.com with specifics about your solution.

Sorry for the late update but it was the indexing being turned on. Forgot to update before.

Hi Wim,

My database has grown to about 50GB, simply because I store 4 images for each record:

1. field image

2. context image

3. lab image

4. prep image.

Browsing these records has become an issue.

I need to reference these images and store them externally to reduce the size. I need the external storage to have subfolders for each of the 4 image sets. Help me achieve that. Thanks

Derek Vanderby (our business manager) will contact you about this. There are a few options here. The relatively easy one is to start using remote container storage so that the main file remains lean. The other option is to forgo regular FM containers and use an external document storage; we recommend AWS S3.

The performance while browsing is something we’d have to look at, that’s mainly a factor of how the solution is designed.

Hi Wim,

You kindly provided a link to this article in answer to a question I posted on the FM Community pages yesterday (30 Dec 2021), in connection with issues arising out of my attempts to set up a separate archive to handle ‘referenced’ container content. In the course of those exchanges, you and others suggested changing to embedded storage would be an easier and more robust way to achieve the same ends, and that seemed good advice.

The methodology and rationale set out in your article largely mirror what I had already started to write to implement this change-over, but reading your response to Lorenzo’s query (SEPTEMBER 18, 2014 AT 12:13 PM, at the top of these comments) about backup issues rather stopped me in my tracks, and makes me wonder if this approach might not be so good after all in my particular situation.

My particular application is for personal use at the moment, with just myself and immediate family as users. It runs on my personal iMac and is shared using FileMaker’s built-in file sharing, i.e. it does not run on FileMaker Server, or even on a dedicated machine. Container data is currently stored by reference to the original file, with the attendant risk of losing content if the original is moved. I therefore planned to create and maintain a separate directory structure to hold the image files, controlled by FileMaker but located in a shared Dropbox folder and still using file references rather than embedded storage. I felt the advantages of this would be a reduced application file size, improved responsiveness of the application generally over our low-bandwidth network, and faster download of image files from the local Dropbox archive into the container field when documents need to be displayed. I perhaps naively considered that the attendant read/write processes to the Dropbox archive could effectively be treated as isolated input/output, akin to FileMaker sending a job to the printer, and that the whole thing (application and image archive) could be backed up normally by Time Machine. However, you and others strongly advised against using Dropbox because, as I understand it, of the risk of synchronisation issues causing corruption, and I accordingly decided to go with embedded storage and accept a possible hit due to our slow Wi-Fi. However, your reply to Lorenzo implies that your advice may have been predicated on an underlying assumption that backups would always be handled by FM Server’s integrated backup, which I don’t actually have and don’t otherwise need.

So, in short, can you please advise whether you believe that relying on Time Machine for backups of both the FileMaker app and its container storage would be wise? Could that compromise file integrity?

Peer-to-peer sharing is deprecated and has historically been problematic as people tend to put the actual FM files in OS-level shares, leading to long-term damage to the files.

Putting any part of a live system under ‘shared’ control between FM as the host and another system like Dropbox is also risky and will inevitably lead to problems. FM needs to maintain exclusive locks on all of its files. With RC data the problem doesn’t usually manifest itself as quickly or dramatically as with an actual FMP file, but the principle is the same.

If you are going to use Dropbox, then use its APIs and let Dropbox manage the files, not FM. Use FM only to store metadata about the files. That way you can use the many features that Dropbox has already built and your solution will behave the same no matter where the files are.

As to peer-to-peer sharing: I would strongly suggest reconsidering that. The basic entry-level FM license has 5 seats and the rights to use FMS. Two or three individual FM Pro licenses are usually more expensive than this entry-level ‘team’ license. And once you have FMS in the mix, you get the benefit of better performance and live backups.

Time Machine: don’t do it. This is just another process that will try to work with the files and might/will interfere with FM doing its host job. Here too, FMS gives you the tools to do these kinds of live backups but FMP does not. For an example on how you can use Time Machine for snapshotting purposes with FMS, read this blog post: https://www.soliantconsulting.com/blog/filemaker-backup-strategy/

Hi Wim

I have 6 terabytes of images, and in a test, transferring from secure to open storage took a week. We have about 15 containers that need transferring, and each one takes a week.

We host the database and users use it 24 hours a day!

I don’t want to transfer the images while users are adding/editing the containers that are being transferred. Neither is having downtime for many weeks an option.

Is there a way to take a backup, do the transfer on the backup copy, then overwrite the copy with the latest FM live file, then edit the live file (via a script probably) to change container attributes, to point to open storage? If this was possible, then there would be no downtime as the transfers would have already been done.

I’ve researched and tried but don’t think it’s possible.

Maybe it’s not an issue, but I’m scared of transferring a hosted live file that’s in constant use. Oh, what a pickle. Any help gratefully appreciated!

Hi Jamie,

There are a couple of options that I see. Some depend on the actual design of the solution; others involve offloading the work to something outside of FM that can work with FM through its APIs.

With that much data though we would probably want to stay away from actual container fields in FM and use a file/document management system that does that very well, and that has APIs. FM is extremely good at that kind of integration and FM can then work with the metadata: the data about a file, without having to store or manage the actual file.

Could be as simple as AWS S3, box.com, OneDrive/SharePoint, Dropbox…

Without referenced files, how will I show images to all users?

Showing images to all users in FM solution is just a matter of using the container field. You embed the picture into the container field and you have the option to leave it embedded (stored inside the actual fmp12 binary file) or use FM’s “remote container” feature where FM will manage where the picture is stored.

If you don’t want the pictures stored in and by FM, then you can integrate with any document management system that has APIs: box.com, Dropbox, OneDrive, SharePoint, S3, NextCloud, OwnCloud… there are many great options here.