Have I told you lately…that backups are important?

Over the years, I’ve spent a lot of time writing and speaking about what it takes to set up a solid deployment and how a good backup strategy plays a crucial role in the overall plan.

But…all too often, we see deployments where backups do not happen. Not because the business owners don’t want them to happen but because the performance impact is just too much. Running the backup interferes with the overall user experience to the point that most disable them. Or, they set them to only happen once or twice a day or even just once a week. Sometimes, the team deploying the solution just has a flawed understanding of how FileMaker Server does backups, including non-standard options and how to make them work properly.

We think that is just plain wrong. And we’re happy to help think through some alternatives.

How much data are you willing to lose?

Let’s start with the basic questions to answer:

- How much data are you willing to lose?

- What is the maximum amount of time you can live without your system?

The answer to the first question cannot be “None.” With the current state of the FileMaker platform, it is not possible to have a hot standby where every single data change is simultaneously written to two servers. That means that you will always have to revert to your latest backup, and you will lose whatever data was entered or changed since that backup was taken. The thing to do then is to set up a backup frequency that guarantees that you will always have a backup that is not older than your goal.

The second question speaks to how quickly you can retrieve the relevant backup and host the files again. If the original machine with FileMaker Server is still available, then that is really just a matter of copying the backup to the proper location. But if the FileMaker Server machine is not operational anymore, then this may mean standing up a new server in the same location or in a different location.

Leveraging High-Availability Configurations

At DevCon 2010, I presented a session on how to set up two FileMaker Servers in a high-availability configuration. One server hosts the live solution. A second server hosts a copy of the solution plus the audit log file from the first solution and uses a server-side schedule to read the audit log and roll all the changes into the standby copy. That setup ticked a few boxes in the realm of high availability as a hot/warm standby since we could very quickly switch over from one server to another and do that with minimal data loss. This setup did not use FileMaker backups to achieve its goal and is obviously fairly expensive both in development time (to have robust audit logging) and in infrastructure (having two servers running at all times).

But it serves as an example of how thinking outside of the box can be the answer.

One thing that this construct did not provide, though: multiple restore points. What if you want to go back to a backup from yesterday afternoon to retrieve a record that was accidentally deleted? For that, you’ll still need to run multiple backups.

Multiple Backup and Restore Points

FileMaker Server has two basic built-in backup mechanisms:

- Backup schedules that run at a set time. These take a copy of all the hosted files and create a date-time stamped folder. The settings per schedule allow you to define how many backup sets you want to keep. FileMaker Server manages that by deleting an old backup after it has created a new one, to keep to your requested number of sets. Each set is a point-in-time restore point.

- Progressive backups that run after a set interval of time has elapsed (every x minutes). FileMaker Server keeps two backup sets of the hosted files, collects all changes that are made during the interval, and then rolls those changes into the oldest of the two backup sets. The default setting is every 5 minutes which means that you will always have a backup set that is at most 5 minutes old and another one that is at most 10 minutes old. Progressive backups are usually less taxing on the server’s disk i/o than a regular backup schedule. But one thing that the progressive mechanism does not provide you is the ability to go back in time beyond the interval times two. By default, for instance, you cannot use it to go find a backup from an hour ago.
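To make that arithmetic concrete, here is a minimal sketch. The function name is made up for illustration and is not part of FileMaker Server; it only prints the worst-case age of the two progressive backup sets for a given interval.

```shell
#!/bin/bash
# Hypothetical helper: worst-case age of the two progressive backup sets.
# $1 = progressive backup interval in minutes
progressive_backup_ages() {
  local interval="$1"
  # The newest set is at most one interval old, the older one at most two.
  echo "newest: $interval min, oldest: $((interval * 2)) min"
}
```

With the default interval, `progressive_backup_ages 5` prints `newest: 5 min, oldest: 10 min`. Anything older than that has to come from a regular backup schedule.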

The choice then really is not between using backup schedules OR progressive backups but finding the right combination of schedules and the progressive interval. One option could be to set the progressive backup interval to 10 or 15 minutes and use an hourly backup schedule. “But it is slow!” I hear you say. And I also hear, “But my solution is 100GB; I can’t take twelve backups per day. It would fill up my hard drive!”

Misunderstanding Backup Systems

The inherent efficiency of both backup mechanisms is often misunderstood. Knowing how it works is very important because it can help in how you architect your solutions to take advantage of it and achieve the quickest possible backups. Hard-linking makes this possible.

Hard-linking

When hard-linking became part of how FileMaker Server does backups (in FileMaker Server 12), Steven Blackwell and I wrote a white paper with a lot of technical under-the-hood details. While that document is certainly old, all of what is in the white paper is still valid.

The short version: while every single backup set that FileMaker Server creates is a full set, that does not mean that it actually consumes the exact total amount of disk space of all your files combined. The magic of hard-linking is that it does not require disk space for files that have not been changed between two different backup runs of the same schedule.

The misunderstanding around this feature often stops developers from even considering using frequent backups. Say that your solution is 30GB, but most of that is in Remote Container data (say 25GB of it); the rest is in one FileMaker file that is 5GB in size. Since most of the RC data will not change over time, each backup run will need 5GB (assuming that some data in the FileMaker file itself changed between backup runs), plus whatever new or modified container data there is.

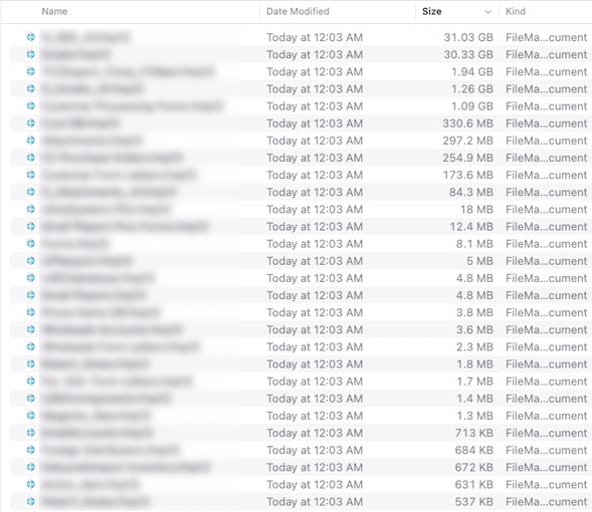

Say 100MB worth of new pictures were uploaded. That means that the next backup run will consume 5GB + 100MB of disk space. Not the full 30GB + 100MB. Yet when you look at your backup in macOS Finder or Windows Explorer, you will see that it shows up as the full 30.1GB. FileMaker Server backups are highly efficient on both the disk space used and the time it takes to perform the backup.
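If hard links are new to you, the following sketch shows the general idea with the plain `ln` command. It is only an illustration of the file system feature, not FileMaker Server's own backup code, and the file names are made up: two names in two "backup sets" point at the same data on disk, which is why each set looks full-size in Finder or Explorer while the unchanged data is stored only once.

```shell
#!/bin/bash
# Illustration only: plain `ln`, not FileMaker Server's own backup code.
# Creates a file plus a hard link to it in a temp folder and reports
# whether both names point at the same data on disk.
hardlink_demo() {
  local dir
  dir="$(mktemp -d)" || return 1
  # Stand-in for an unchanged hosted file in the previous backup set.
  echo "unchanged container data" > "$dir/backup1.dat"
  # The "next backup set" gets a second name for the same data, not a copy.
  ln "$dir/backup1.dat" "$dir/backup2.dat"
  # -ef is true when both paths refer to the same device and inode.
  if [ "$dir/backup1.dat" -ef "$dir/backup2.dat" ]; then
    echo "same data on disk"
  else
    echo "separate copies"
  fi
  rm -rf "$dir"
}
```

Calling `hardlink_demo` reports that both names share the same data. A changed file, by contrast, has to be written out in full as a new copy.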

So don’t let the total size of your solution drive the conclusion that you cannot do frequent backups. That is especially true if you can leverage Remote Containers, split your FileMaker solution so that the static data that rarely changes lives in a different file from the data that changes often, or archive historical data into its own static file.

Monoliths

But what if the solution you are working with is, in fact, a monolith? There is just one file, and it is huge.

Unfortunately, when you modify a single field on a single record, FileMaker Server will use up the full size of the file on the next backup run, and it will need whatever time it takes to make that copy. In our example, if all 30GB sat in a single file, adding just a single character to a field would need the full 30GB of disk space to store the new backup of that big FileMaker file.

But the solution still is not to reduce the frequency of the backup and leave the business with potentially more lost data than they are comfortable with or to keep fewer restore points around.

Snapshots

Most deployments have the ability to use volume snapshots. Either as supported by the operating system itself, which works for a physical or a virtual machine, or externally by the technology that provides the virtual machines.

Volume snapshots are extremely fast to produce, and they store only the difference between the current and the last snapshot, so they are also extremely efficient in their disk space usage. But they have to be done with the proper care to produce a valid, usable backup. FileMaker Server has to be in the paused state during the snapshot. Without the pause, the FileMaker files contained in the snapshot will be improperly closed at best and damaged at worst.

External Snapshots

If you are using virtual machines, then you can also use snapshot capabilities provided by the surrounding infrastructure. Either through the hypervisor software or the equivalent technology used by the instance provider.

soliant.cloud Snapbacks

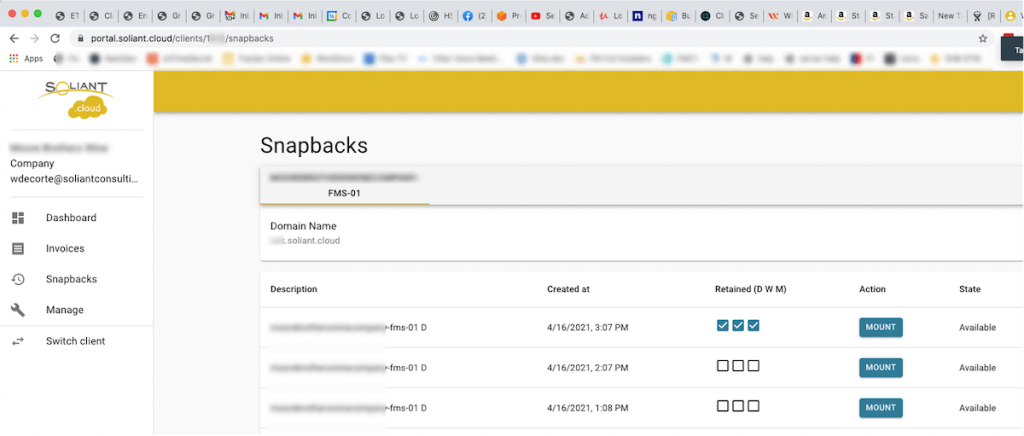

We use AWS for all of our cloud hosting and use the AWS toolset as the basis for a very high-performant backup mechanism using EBS snapshots. Restoring from a backup is just as critical as taking the backup; our client-facing portal lets our developers and clients retrieve any snapshot and mount it back to their server to retrieve the available files. We don’t use the native FileMaker Server backups. Snapshots are much faster, and the restore experience we built is well-crafted and entirely intuitive. We call it Snapbacks because it includes the mechanics for both taking the snapshot and getting the files back.

The Claris FileMaker Cloud server uses something similar, illustrating that the approach is an accepted mainstream backup method.

With the proper care, using snapshot technology available to you can produce incredibly fast backups that are very efficient in the amount of disk space they consume. The example later in this blog post will put some numbers around that.

Internal snapshots

All operating systems have a native method for producing volume-based snapshots. Compared to external snapshots, the main drawback here is that it does consume some disk space on the machine itself.

Each snapshot only has to store the data blocks that are different from the last snapshot, so it is very efficient. Snapshots do not work at the file level; they don’t copy whole files. Snapshots operate at a deeper level, much better suited for this kind of block-differential comparison. The amount of disk space required factors in the number of snapshots taken and the number of blocks changed between each run. Over time it can add up and reduce the amount of disk space you have available. You can store many more snapshot backups than you can file-based backups, but it is still a very important difference from external snapshots and something to be aware of.

Windows

The white paper linked earlier has an example of how to use the Windows Volume Shadow Copy Service (VSS) to instruct Windows through the command line to take a snapshot. Assuming you have your live FileMaker files on the D: drive, taking a safe snapshot comes down to this:

fmsadmin pause -u admin -p adminpw -y

vssadmin create shadow /for=D:

fmsadmin resume -u admin -p adminpw -y

(If you have been around the FileMaker platform for long enough, you will recognize this as the way FileMaker Server did backups before version 7, with a copy of the files in between the pause and resume.)

The pause before and the resume after are absolutely critical because pausing is the only way that we can guarantee that FileMaker Server puts the files in a safe state for outside manipulation.

FileMaker Server is not snapshot aware, and without the pause, any files that you retrieve from a snapshot will come back as improperly closed and will require a consistency check. And sometimes they will come back as damaged. Pausing suspends all user activity and makes FileMaker Server flush its cache to disk. The natural fear here is, of course: doesn’t this affect the users? In practical terms, it does not. The snapshots are so blazingly fast that this whole operation typically takes less than a second.

macOS

While you can find a lot of information on Windows VSS functionality, there is virtually nothing in the macOS realm about similar functionality. But it definitely exists.

We recently had to explore this for a fairly typical deployment: an old solution with big files that prevented regular backups. The client has a FileMaker solution that has been around for 10+ years and has grown organically. Things that used to run smoothly are not that smooth anymore as the file sizes have inflated with a lot of historical data: the two biggest files are each over 30GB in size, with a few other files over 1GB. In order to achieve some efficiency, most container fields have already been converted to Remote Container storage; that in itself accounts for 10GB of data. The whole solution consists of 40 files and 77GB combined.

Running a full backup on their Late 2012 Mac Mini during busy office hours takes more than 15 minutes. (This is in part because the machine is not truly dedicated to FileMaker Server and acts as the Open Directory server and as their file server.)

The two biggest FileMaker files always change between every backup run because those are the files that the users do most of their work in. So every backup run does need 60GB+ of new disk space, and the machine only has about 200GB of disk space left.
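A quick back-of-the-envelope check shows how tight that is. The helper below is a hypothetical sketch, not a FileMaker tool. It ignores the hard-linked unchanged files and everything else that competes for the disk, and only divides free space by the new space each run needs.

```shell
#!/bin/bash
# Hypothetical helper: how many full-copy backup runs fit in the free space.
# $1 = free disk space in GB, $2 = new disk space needed per backup run in GB
max_backup_sets() {
  local free_gb="$1" per_run_gb="$2"
  # Integer division: a partial backup set is of no use.
  echo $(( free_gb / per_run_gb ))
}
```

With the numbers above, `max_backup_sets 200 60` prints `3`: room for only three restore points before the disk is full.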

Normal backups are then too slow, and you do not have enough disk space to do frequent backups. The backups were reduced to three per day: 7 am, noon, and 5 pm. But the business is very uncomfortable with potentially losing half a day of work.

Faster Backup Options

Possible paths to faster backups that were ruled out by the business immediately:

- The client had no budget for switching to a different and faster server. A newer Mac Mini would be the obvious choice; our tests show that the backup time goes down to about 3 minutes per run on a 2018 Mac Mini i3. That is still too long for, say, a 15-minute schedule and possibly even too intrusive for an hourly schedule.

- The solution is not optimized and ready for cloud hosting; the performance impact on the users would be huge. So we can’t take advantage of technology that would allow us to take efficient external snapshots.

- No desire to spend money on development to make the files more efficient and achieve faster backups this way (in this case, archiving old data would be the way to go). And that would not be a quick fix anyway, as this requires an understanding of the use cases where the user does want to search current and old data and how to show the right data at the right time.

But we did have to do something quickly to allow the client to have more than just the few backups that they were operating under.

Time Machine

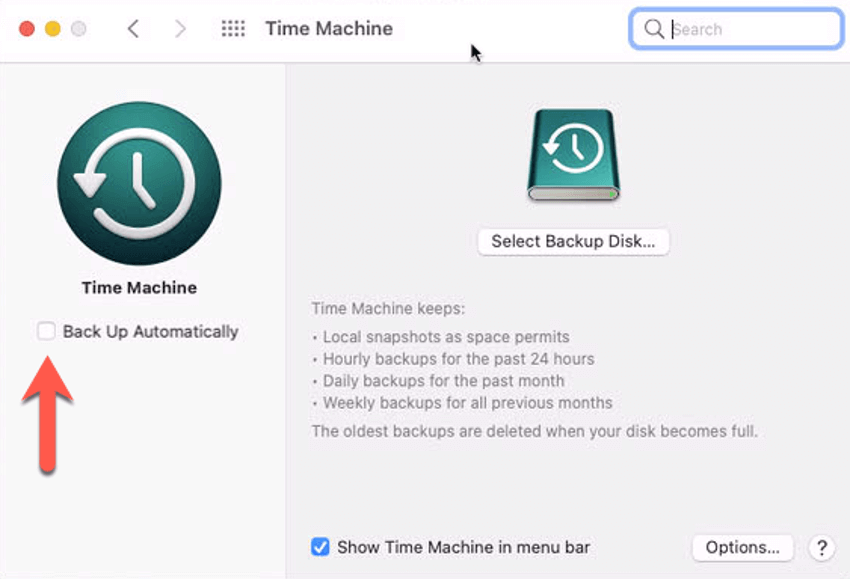

Enter Time Machine without actual Time Machine backups.

Time Machine is the obvious choice for macOS backups, but you cannot let it touch the live hosted FileMaker Server files or the backup folders, since it may interfere with FileMaker Server while it is in the process of running a backup. The files restored from Time Machine would be ‘improperly closed’ and potentially damaged. That means that you cannot let Time Machine run automated backups.

But you can use the underlying snapshot technology to run your own backups.

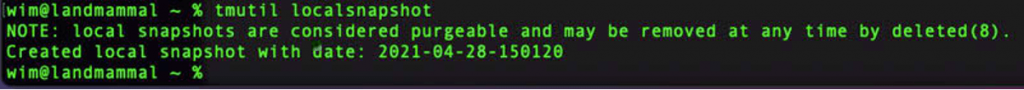

And just like with the Windows VSS example, it starts with asking FileMaker Server to put the files in the paused state, then running the snapshot and letting FileMaker Server resume normal operations:

fmsadmin pause -u admin -p adminpw -y

tmutil localsnapshot

fmsadmin resume -u admin -p adminpw -y

When you take a local snapshot, there is a very important note to consider:

Time Machine will make its own decisions about how long to retain the snapshots, and much of it depends on the amount of free disk space. The other factor is age; it will automatically remove snapshots based on its internal (and unmodifiable) age tracking logic.

This warning is a solid reason why this snapshot approach is a good supplement to the overall backup strategy but should not become the only way to do backups on macOS.

You can view what snapshots have been taken with this command:

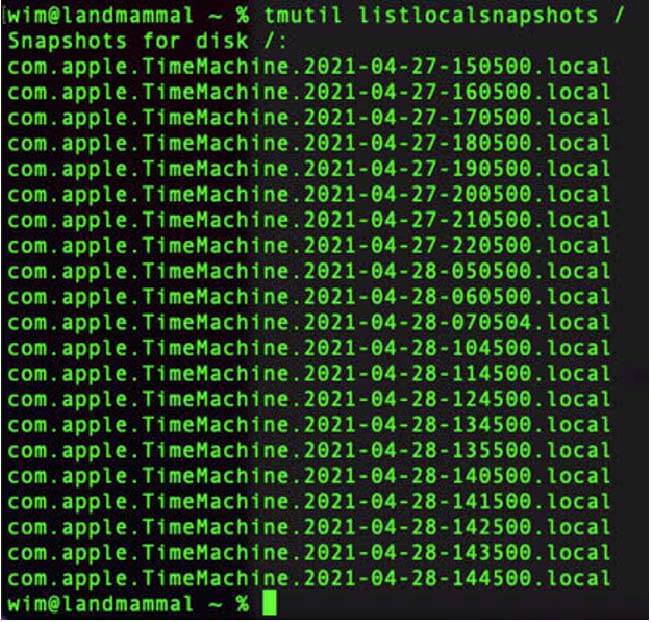

tmutil listlocalsnapshots /

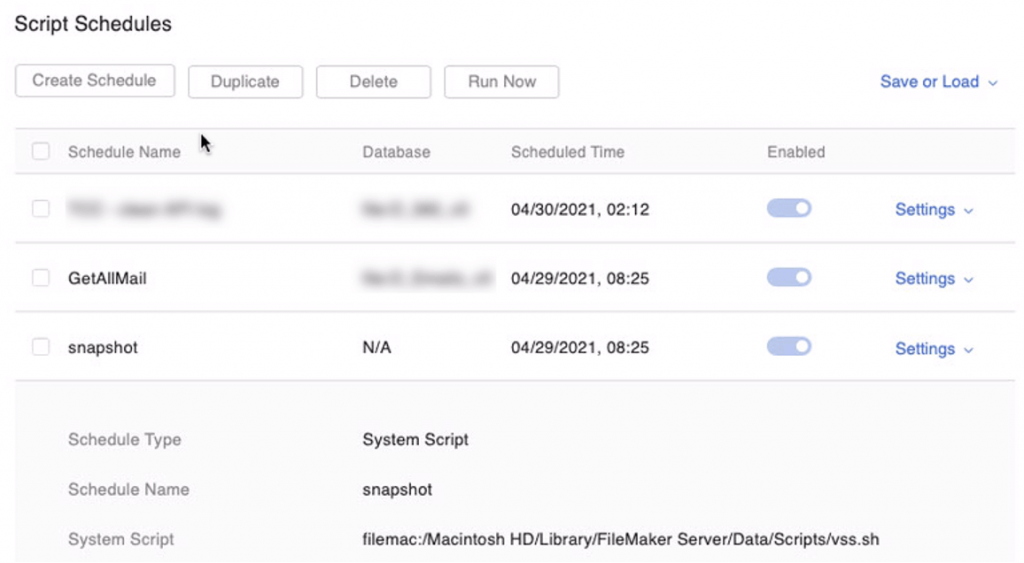
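If you want to check the list from a script rather than by eye, a small filter can help. This is a sketch under one loud assumption: that the snapshot names in the output embed a timestamp in the form `YYYY-MM-DD-HHMMSS` (for example `com.apple.TimeMachine.2021-03-10-101500.local`). Verify that against the output on your own macOS version. The function names are made up for illustration.

```shell
#!/bin/bash
# Reads `tmutil listlocalsnapshots /` output on stdin and prints one
# timestamp (YYYY-MM-DD-HHMMSS) per snapshot.
# Assumption: snapshot names embed the timestamp in that form, e.g.
# com.apple.TimeMachine.2021-03-10-101500.local
snapshot_timestamps() {
  grep -oE '[0-9]{4}-[0-9]{2}-[0-9]{2}-[0-9]{6}'
}

# Prints how many snapshots are listed on stdin.
snapshot_count() {
  snapshot_timestamps | wc -l | tr -d ' '
}
```

Usage would be along the lines of `tmutil listlocalsnapshots / | snapshot_count`, which makes it easy to alert when the count unexpectedly drops.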

In this deployment, we have snapshots done through a shell script scheduled by FileMaker Server every 10 minutes. Time Machine retains a snapshot for every 10 minutes of the last hour, then fifteen hourly snapshots. We have no control over this.

When you stop taking snapshots (when you stop the schedule or FMS is not running), the 10-minute ones drop off after an hour; then, every hour, one of the hourly ones gets removed. These are not persistent backups!

To schedule the snapshot backups, we wrap those three command lines into a shell script and use a FileMaker Server schedule to run it every 10 minutes.
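A minimal sketch of such a wrapper could look like the following. It is not the exact script from this deployment. The variable and function names are made up, the credentials are the same placeholders used above, and it assumes that `fmsadmin` returns a non-zero exit status when the pause fails.

```shell
#!/bin/bash
# Minimal sketch of the pause / snapshot / resume wrapper.
# FMSADMIN and TMUTIL are overridable so the logic can be dry-run;
# the credentials are the placeholders used in the article.
FMSADMIN="${FMSADMIN:-fmsadmin}"
TMUTIL="${TMUTIL:-tmutil}"
FMS_USER="${FMS_USER:-admin}"
FMS_PASS="${FMS_PASS:-adminpw}"

take_snapshot() {
  # Never snapshot unless FileMaker Server confirms the pause.
  if ! "$FMSADMIN" pause -u "$FMS_USER" -p "$FMS_PASS" -y; then
    echo "pause failed, no snapshot taken" >&2
    return 1
  fi
  "$TMUTIL" localsnapshot
  local status=$?
  # Resume no matter how the snapshot went, so users are not left paused.
  "$FMSADMIN" resume -u "$FMS_USER" -p "$FMS_PASS" -y
  return "$status"
}
```

Add a `take_snapshot` call as the last line of the script file that the FileMaker Server schedule runs. The design choice worth keeping is the order: skip the snapshot when the pause fails, and always resume once the pause succeeded.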

The two big files receive new data constantly through the GetAllMail schedule.

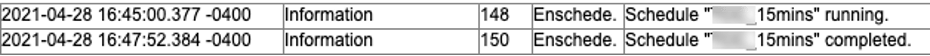

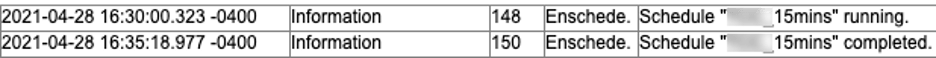

A regular backup schedule takes between three and five minutes to complete depending on how busy the machine is at the time (mostly on the disk i/o side).

and

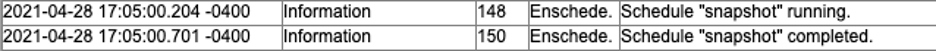

But when we look at the FileMaker Server event log, we see that the snapshots consistently take about half a second to complete. You’ve read that correctly: sub-second performance.

That definitely solves the problem of setting up a backup strategy that is frequent enough to allow the business to lose a minimal amount of data on an outage in between their regular scheduled backups.

Now that you have snapshots: how do you restore files?

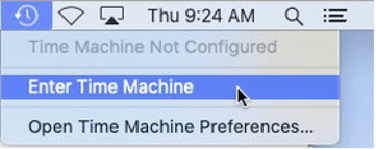

Since we are using the underpinnings of Time Machine, we can actually use the Time Machine UI to restore our files. Go into Time Machine:

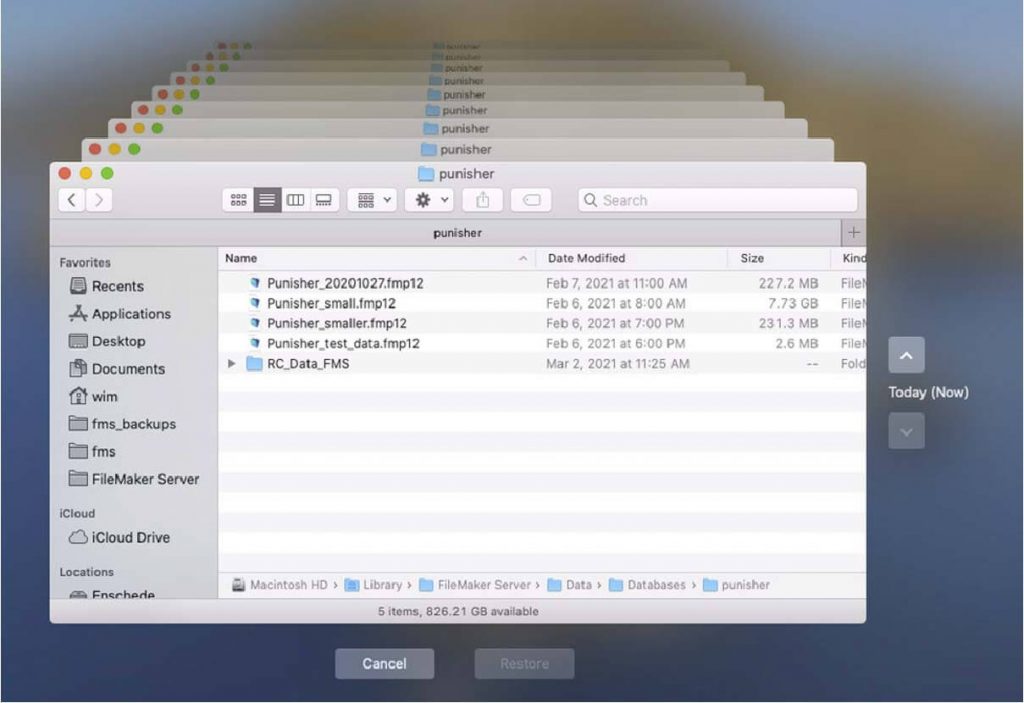

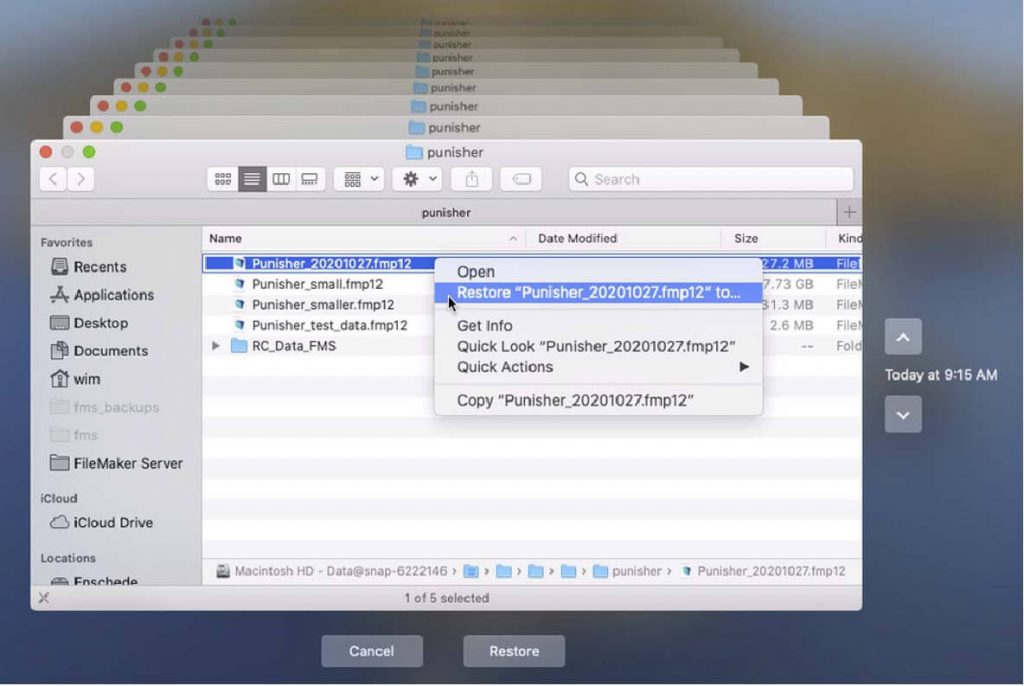

This will give us the ability to use the navigation to go back through different snapshots and select one or more files to restore:

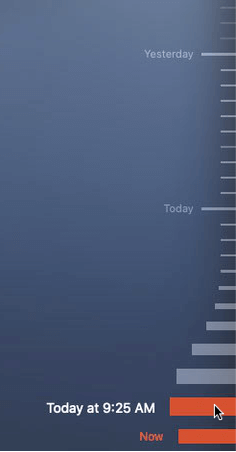

If the regular up/down arrows on the right do not give you access to all your snapshots (which I have found Big Sur to do occasionally), you can use the slider on the right of the window to select the proper snapshot:

Once you have located the files you want to get back, you can restore them to your hard disk:

I would highly recommend restoring to some staging folder so that you have a chance to inspect the file before you press it into service.

It is at this point that you are trading off some backup performance for restore performance.

This is where you will incur the copy-time penalty that you otherwise would have at every backup: macOS needs to copy the selected files over to a place on the hard drive. Doing that with 60GB+ of files takes two and a half minutes on our test Mac Mini, which is about the time it takes to do a regular backup.

But since restores are a lot less frequent than backups, this is a very worthwhile trade-off to make: half a second backup time in return for a few minutes of restore time.

Snapshots are a very fast backup mechanism and can be a very useful addition to the overall backup strategy, provided that they are done safely.

When you can use external snapshots (like AWS EBS snapshots) then that can be set up to be the main backup mechanism, provided you are familiar with the restore procedure and can execute that quickly. Most technologies in this realm lend themselves very well to automation through APIs, which is how we perfected our client portal for restoring from AWS snapshots.

Internal snapshots can be used to great effect in the scenarios described above, where the size of the files and the performance of the server make regular FileMaker Server backups painful. But when you use internal snapshots, they should not be the only way you do backups. Make them part of a bigger strategy that involves regular FileMaker Server backups and FileMaker Server progressive backups. And we would add: adjust the architecture of the solution to avoid big monolithic files stuffed with many tables holding a lot of data that almost never changes once generated.

What internal snapshots do not solve:

- Off-machine and off-site backups. In-machine snapshots stay with the machine. External snapshots (like AWS snapshots) can more easily be kept in different and redundant locations.

- Persistent restore points. Here too, it depends a lot on the technology used. The macOS snapshots can be removed at any time. Windows snapshots are a little more persistent but will still get purged when the maximum allowed disk space reserved for snapshots gets exceeded. AWS snapshots, on the other hand, are completely under your control, and you get to put life-cycle rules around them to fit your need.

Best Practices for Efficient Backups on FileMaker Server

There are some other best practices that relate to how efficient backups will happen on your FileMaker Server:

- Dedicate the server to only the FileMaker Server task. Anything else you run on the server and especially anything that adds load to the disk i/o is going to make backups slower.

- Separate the live files and backups onto separate volumes. Snapshots are always volume-based, so you can limit what is being backed up by making good use of different volumes in your FileMaker Server.

- Avoid cloning and verification for the regular FileMaker Server backups during the business day. These two backup options add time to the backup run. Do these tasks only for backups that happen when the machine is not under load.

- If you are thinking of incorporating snapshots into your backup strategy, use the belt-and-suspenders approach: expand those simple snapshot scripts to do proper logging and alerting, and build in some exits, for instance, when FileMaker Server cannot pause the files. And certainly monitor the overall free disk space on your server.
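As a starting point for that last item, here is a hedged sketch of two guards you could add to a snapshot script: a timestamped log line and a free-disk-space check. The function names, the log path, and the threshold are illustrative assumptions, not FileMaker Server settings, and the free-space check relies on the standard `df -Pk` output layout.

```shell
#!/bin/bash
# Sketch of two guards for a snapshot script; names and thresholds are
# illustrative assumptions, not FileMaker Server settings.

# Append a timestamped line to the log file given in $1.
log_msg() {
  local logfile="$1"; shift
  echo "$(date '+%Y-%m-%d %H:%M:%S') $*" >> "$logfile"
}

# Print the free space in KB on the volume that holds path $1.
free_kb() {
  # -P forces POSIX single-line output; column 4 is "Available".
  df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Succeed only if the volume holding $1 has at least $2 KB free.
enough_free_space() {
  local avail
  avail="$(free_kb "$1")"
  [ -n "$avail" ] && [ "$avail" -ge "$2" ]
}

# Example guard at the top of a snapshot script (20 GB threshold is arbitrary):
#   enough_free_space / 20971520 || { log_msg /tmp/snapshot.log "low disk space"; exit 1; }
```

Alerting (email, monitoring hooks) is left out on purpose; the log file gives whatever alerting tool you already use something to watch.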

As always, do reach out to our team when you have any questions about making your FileMaker Server deployment stable, secure, and performant.

Great article. When our production servers go down the stress our staff feel, not to mention the frustration our clients feel, is not healthy. Especially when a file doesn’t open and it takes hours to be recovered. Thanks to this article we will start using hourly snapshots. We were thrilled to learn that when we pause even a busy server, the snapshot is so fast that users do not even notice the pause. We’ll have to do some coding to only keep the two most recent snapshots on disk, but what this means is that in a worst-case scenario when a server goes down, and a file doesn’t open, we can get the client back up quickly and all they lose is, at most, the last hour of data. This is huge.

Once again Wim, thank you for sharing your knowledge with the Claris community.

Hello Wim

Thanks for this great article. I will definitely use this backup strategy. Is there any chance to get the snapshot shell script from you? That would be super nice.

Kind regards

Rasim

Hi Rasim,

The content of the core shell script is listed just underneath the Time Machine screenshot. Ideally you’d want to flesh it out with the error trapping you find appropriate.

Best regards,

Wim