Driving Value with Salesforce Integrations

As a Solutions Architect, I often get into situations where Salesforce is not the only sheriff in an enterprise system’s town. My team and I must integrate the platform with other enterprise systems to help our clients deliver on the promise of a unified customer experience. Salesforce ends up owning part of the experience, handing off to other systems and picking back up again at another touchpoint. In such situations, we need to ensure a robust integration of the systems involved and a seamless transfer of data.

Of course, Salesforce already has a rock-solid API, but as an architect, I’d rather avoid point-to-point connections and build loosely coupled systems with suitable layers of abstraction between them, if I can help it. Yeah, believe it or not, that’s a thing with architects, but it’s beyond the scope of this post. Feel free to google it!

Salesforce Integrations Using Platform Events and Change Data Capture

In this post, I’ll describe how to loosely couple Salesforce with other systems within an enterprise using Platform Events and Change Data Capture (CDC) – two exciting and recent entrants to the Salesforce stables.

In one instance, a client wanted to build a 360-degree view of a customer with many internal and external systems in play. These included:

- Data files coming in from external parties such as government bodies, private third-party administrators, etc.

- Master data management system (MDM)

- Training management and delivery system

- Data warehouse system

- Salesforce Marketing Cloud

Customer data needed to be suitably mastered and then exchanged across systems seamlessly. And of course, Salesforce needed to exchange data with a few of these systems. While the full data flow and multitude of data exchange scenarios are beyond the scope of this post, I’ll focus on the Salesforce aspects and how we used Platform Events and CDC to handle them.

Specifically, I’ll share how to create a loosely coupled, publish-subscribe data exchange mechanism between Salesforce and one of those systems, the MDM system, using an event-driven architecture. The mechanism exchanges a mastered (single, unique version) customer record and related data, such as employment and credentials, between the MDM system and Salesforce.

Architectural Approach

A few key considerations shaped the architectural approach.

Publish / Subscribe Design Philosophy

The enterprise architect had set a goal of loosely coupled systems driven by a publish-subscribe design philosophy. As the Salesforce Solutions Architect, I decided to go with platform events on the Salesforce side to deliver on that goal, an easy choice. Any third-party system can easily publish events for Salesforce to create a Contact record representing a customer.

Salesforce, in return, can publish an event containing the record ID of the Contact record for the third-party system to consume, completing the data exchange handshake. No system needs to wait around for another to do its job, yet each receives an acknowledgment when the other has done so. Easy-peasy!
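To make the handshake concrete, here is a minimal sketch in Python rather than the Apex and Boomi pieces the project actually used. It assumes the receiving side keeps a cross-reference of MDM IDs to Salesforce IDs, filled in when the acknowledgment event arrives, and uses it to decide whether the next change for a customer goes out as a create or an update. The class, method names, and ID values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CrossReference:
    """Hypothetical MDM-side map from MDM customer IDs to Salesforce Contact IDs."""

    sf_ids: dict = field(default_factory=dict)

    def on_acknowledgment(self, mdm_id: str, salesforce_id: str) -> None:
        # Salesforce published an event carrying the new Contact record ID.
        self.sf_ids[mdm_id] = salesforce_id

    def change_type_for(self, mdm_id: str) -> str:
        # Records that Salesforce has already acknowledged are sent as updates.
        return "UPDATE" if mdm_id in self.sf_ids else "CREATE"


xref = CrossReference()
print(xref.change_type_for("MDM-1001"))  # CREATE
xref.on_acknowledgment("MDM-1001", "003000000000001AAA")
print(xref.change_type_for("MDM-1001"))  # UPDATE
```

Neither side blocks waiting on the other; the acknowledgment simply updates the mapping whenever it arrives.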

Cost Considerations

We had to be cost-conscious on the Salesforce side, so we couldn’t just dream up various event types and add publishing/processing logic for each event type. That would just make the solution too expensive. We had to get creative. Enter Change Data Capture (CDC), an exciting feature on top of the already exciting Platform Events infrastructure. Just enable Change Data Capture on relevant objects, and the platform handles publishing change events for you.

You do have to remain cognizant of the expected volume of changes and the daily number of events you publish. You may have to buy additional event blocks, so factor in the cost of doing so. In our case, we did some volume estimates and a cost-benefit analysis to show that the cost was justified.
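The volume estimate itself is simple arithmetic. Here is a rough Python sketch, assuming (conservatively) one published event per record change and one delivery per external subscriber. The daily allocation is a parameter you would look up for your own org and edition, and the headroom factor is a hypothetical safety margin, not a Salesforce setting. The figures in the usage line are made up for illustration.

```python
def estimate_daily_events(daily_changes: dict, external_subscribers: int,
                          daily_delivery_allocation: int, headroom: float = 0.8):
    """Return (published, delivered, fits) for a rough daily volume estimate."""
    published = sum(daily_changes.values())        # assume one event per record change
    delivered = published * external_subscribers   # each subscriber receives each event
    fits = delivered <= daily_delivery_allocation * headroom  # keep a safety margin
    return published, delivered, fits


# Hypothetical inputs: changes per entity per day, one subscriber (the middleware).
print(estimate_daily_events({"Contact": 2000, "Employment": 1500}, 1, 50_000))
# (3500, 3500, True)
```

If `fits` comes back `False`, that is the point at which buying additional event blocks enters the cost-benefit discussion.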

Processing Control

We had a plan based on Platform Events and CDC, but there was a challenge. The data exchange scenarios with the MDM system meant we needed control over when a change event gets published for a given object. For perspective, Salesforce publishes CDC events automatically whenever a record of a CDC-enabled object is created, updated, deleted, or undeleted, whether the change comes from an end user, an integration, or Apex code.

For example, the simplest case was when MDM sent an update for a previously synced contact; we wanted to make sure that Salesforce did not publish another event. Otherwise, the two systems would constantly receive update events and end up in an infinite loop. Thus, we had to adapt our approach.

We ended up subscribing to our own CDC events in Salesforce, extracting the payload, wrapping it in a custom platform event, and publishing that wrapper event only when needed. The MDM system would then subscribe to the wrapper event rather than the CDC event. Problem solved!
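Why the wrapper matters is easiest to see in a toy simulation. The Python sketch below is purely illustrative and assumes both systems naively republish every change they apply. Without suppression, a single MDM update bounces back and forth until an arbitrary cap stops it. Suppressing Salesforce’s echo of an MDM-originated update ends the exchange after one event.

```python
def count_events(suppress_echo: bool, cap: int = 10) -> int:
    """Count events triggered by one MDM update (toy model, capped to stay finite)."""
    events = 0
    sender = "MDM"
    while events < cap:
        events += 1  # one event crosses from sender to receiver
        receiver = "Salesforce" if sender == "MDM" else "MDM"
        if receiver == "Salesforce" and suppress_echo:
            break  # apply the MDM update, but publish no wrapper event
        sender = receiver  # the applied change fires the receiver's own outgoing event
    return events


print(count_events(suppress_echo=False))  # 10 (only the cap stops the loop)
print(count_events(suppress_echo=True))   # 1
```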

Diagnostics, Tracking, and Error Handling

Last piece of the puzzle: diagnostics, tracking, and error handling. It’s not easy to view the event stream, trace incoming and outgoing events, and check whether data is exchanged appropriately. So we decided to roll our own logging mechanism. It was very simple but very creative, saving countless hours and avoiding the blame game that inevitably ensues when two systems are involved. I sent it; did you get it? I didn’t. Did you send it correctly? You know what I mean. For error tracking, we piggybacked on the same approach, i.e., we published an Error Event!

Design

With the approach solidified, we had to evolve a scalable design that wasn’t too cost-prohibitive on either system. We decided to model our custom Platform Events after the JSON structure of the CDC Event. That way, the processing logic would be pretty similar, whatever the type of event. Economies of scale leading to implementation cost savings. Added bonus – very amenable to standard design patterns for class hierarchy. We needed just two generic platform events – one for the platform event published from MDM to Salesforce and the other for the CDC wrapper platform event from Salesforce to MDM.

Platform Events Design

At the heart of the design was a generic platform event between the two systems with the following characteristics:

- Header

  - Entity the change pertained to (we had four entities)

  - Type of change (Create or Update for now; more options later)

  - Change source (MDM or Salesforce)

- Payload

  - Field name/field value pairs of the changes for any given entity

  - Each system would publish the changes it deems appropriate

  - The subscribing system would decide which changes it is interested in and how to process such changes
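Here is a minimal Python sketch of that generic event shape and its JSON serialization. The class and field names are hypothetical. The post only says the design was modeled on the CDC event’s JSON, whose own header is `ChangeEventHeader`, with fields such as `entityName` and `changeType`.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Header:
    entity: str         # which entity the change pertains to, e.g. "Contact"
    change_type: str    # "CREATE" or "UPDATE" for now
    change_source: str  # "MDM" or "Salesforce"


@dataclass
class ChangeEvent:
    header: Header
    payload: dict = field(default_factory=dict)  # field name -> field value

    def to_json(self) -> str:
        return json.dumps(asdict(self))  # asdict also converts the nested Header

    @classmethod
    def from_json(cls, text: str) -> "ChangeEvent":
        data = json.loads(text)
        return cls(Header(**data["header"]), data["payload"])


event = ChangeEvent(Header("Contact", "UPDATE", "MDM"), {"Email": "a.rao@example.com"})
assert ChangeEvent.from_json(event.to_json()) == event  # round-trips cleanly
```

Because every entity shares the same envelope, a subscriber can route on the header alone, and only the payload handling varies by entity.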

Incoming Events Processing

On the Salesforce side, we had an entire Apex class structure to process incoming and outgoing events.

Incoming events processing involved a base class and one derived class per entity.

The base class was responsible for the following:

- Orchestrating the common processing logic:

  - Deserializing the JSON payload

  - Reading the header to determine the entity type and change type

  - Delegating to the appropriate derived entity class at specific points in the logic using virtual methods

- Infrastructural work:

  - Logging a record for each incoming event processed

  - Publishing error events

The derived classes were responsible for the necessary logic specific to an entity. This includes, for example, the steps involved in creating a record for an entity of a given type. In some cases, that means simply inserting a number of records of one object. At other times, it means associating the record with different parent records based on data attributes. Most of this work takes place in overridden virtual methods that the base class calls at specific points in the processing logic.
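The base/derived split is the template method pattern. Here is a minimal Python sketch of it (the real classes were Apex, and every name below is hypothetical). The base class logs the raw event, reads the header, picks the derived class registered for the entity, and turns failures into error records. The derived class supplies only the entity-specific create and update steps. Plain lists and dicts stand in for the Log Entry object, error events, and Salesforce records.

```python
import json
from abc import ABC, abstractmethod

CONSUMERS: dict = {}  # entity name -> derived consumer class


def consumer_for(entity: str):
    """Register a derived consumer class for one entity."""
    def register(cls):
        CONSUMERS[entity] = cls
        return cls
    return register


class BaseEventConsumer(ABC):
    """Common orchestration; derived classes override the entity-specific hooks."""

    def __init__(self, store: dict):
        self.store = store  # stand-in for Salesforce records

    @staticmethod
    def consume(raw_json: str, store: dict, log: list, errors: list) -> None:
        log.append(raw_json)  # stand-in for the Log Entry record, written first
        event = json.loads(raw_json)
        header, payload = event["header"], event["payload"]
        derived = CONSUMERS.get(header["entity"])
        if derived is None:
            errors.append({"code": "UNKNOWN_ENTITY", "description": header["entity"]})
            return
        consumer = derived(store)
        try:
            if header["change_type"] == "CREATE":
                consumer.on_create(payload)
            else:  # this sketch treats every other change type as an update
                consumer.on_update(payload)
        except Exception as exc:  # stand-in for publishing an error event
            errors.append({"code": "PROCESSING_FAILED", "description": repr(exc)})

    @abstractmethod
    def on_create(self, payload: dict) -> None: ...

    @abstractmethod
    def on_update(self, payload: dict) -> None: ...


@consumer_for("Contact")
class ContactConsumer(BaseEventConsumer):
    def on_create(self, payload: dict) -> None:
        self.store[payload["MdmId"]] = dict(payload)

    def on_update(self, payload: dict) -> None:
        # Raises KeyError for a contact that was never created.
        self.store[payload["MdmId"]].update(payload)
```

Adding an entity means adding one registered subclass; the orchestration, logging, and error handling stay in one place.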

Outgoing Events Processing

Outgoing events processing was simpler, albeit very similar in design: a base class and one derived class per entity.

The base class was responsible for the following:

- Orchestrating the common processing logic:

  - Extracting the CDC event payload generated by the Salesforce platform and wrapping it into a custom platform event

  - Publishing the wrapper event

  - Delegating to the appropriate derived class at specific points in the logic using virtual methods

- Infrastructural work:

  - Logging a record for each outgoing event processed

  - Publishing error events

The derived classes were responsible for the necessary logic specific to an entity, such as deciding when NOT to publish the event based on the entity and its specific circumstances. For example, a derived class might determine that a change is a contact update that originated in MDM, rather than a contact create, and therefore suppress the wrapper event. Again, all such work happened in overridden virtual methods that the base class called at specific points in the processing logic.
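Here is a minimal Python sketch of the outgoing side (the real classes were Apex). The input is a dict shaped like a CDC event payload: `ChangeEventHeader` with its real `entityName`, `changeType`, `recordIds`, and `commitUser` fields, plus the changed field values. The post does not say how an MDM-originated update was recognized. This sketch assumes, hypothetically, that incoming MDM events are applied under a known integration user ID and compares it to `commitUser`. Creates are always published so the new record ID can go back to MDM.

```python
from abc import ABC, abstractmethod


class BaseCdcConsumer(ABC):
    """Wraps a CDC-style change event into the generic outgoing event."""

    def __init__(self, outbox: list, log: list):
        self.outbox = outbox  # stand-in for publishing the wrapper platform event
        self.log = log        # stand-in for Log Entry records

    def handle(self, cdc_event: dict) -> None:
        self.log.append(cdc_event)  # log before deciding anything
        header = cdc_event["ChangeEventHeader"]
        if not self.should_publish(header):
            return  # suppressed: nothing goes back to MDM
        payload = {k: v for k, v in cdc_event.items() if k != "ChangeEventHeader"}
        payload["Id"] = header["recordIds"][0]  # sketch assumes one record per event
        self.outbox.append({
            "header": {"entity": header["entityName"],
                       "change_type": header["changeType"],
                       "change_source": "Salesforce"},
            "payload": payload,
        })

    @abstractmethod
    def should_publish(self, header: dict) -> bool: ...


class ContactCdcConsumer(BaseCdcConsumer):
    def __init__(self, outbox: list, log: list, integration_user_id: str):
        super().__init__(outbox, log)
        # Assumption: incoming MDM events are applied under this (hypothetical) user.
        self.integration_user_id = integration_user_id

    def should_publish(self, header: dict) -> bool:
        if header["changeType"] == "CREATE":
            return True  # always send the new record ID back (the handshake)
        # Suppress updates that merely echo a change MDM sent us.
        return header["commitUser"] != self.integration_user_id
```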

Logging Incoming/Outgoing Events for Diagnostics

To make tracking events super easy so that the system is maintainable in the long run, we designed a custom Log Entry object to hold pertinent details of an event such as the replay id, the type of event, event payload, etc.

The first step in any event processing was to log to this object. That way, we always recorded the events flowing in and out of the system, could build a simple report on this object, and could take the payload JSON for any event and view it in any JSON Viewer to inspect input and compare it with the output.

Like I said before, this saved us countless debugging hours, needless acrimony between teams, and painful heartburn from not seeing things as they happen in real time.

As a bonus, we included a custom metadata type to stop and start such logging at will, mimicking what we as Salesforce experts have learned to do with debug logs in general. Excessive logging that eats up storage space is something to avoid in any design.
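Here is a minimal Python sketch of a switchable log. A plain dict stands in for the custom metadata type record, a list stands in for the Log Entry object, and the field and setting names are hypothetical. Each entry keeps the raw payload JSON so it can be pasted into a JSON viewer later.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LogEntry:
    replay_id: str
    event_type: str
    direction: str      # "IN" or "OUT"
    payload_json: str   # raw JSON, ready to paste into a JSON viewer
    logged_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class EventLogger:
    """Writes log entries only while the logging switch is on."""

    def __init__(self, settings: dict):
        self.settings = settings  # stand-in for a custom metadata type record
        self.entries: list = []   # stand-in for Log Entry records

    def log(self, replay_id: str, event_type: str, direction: str, payload_json: str):
        if not self.settings.get("logging_enabled", False):
            return None  # switched off: save storage
        entry = LogEntry(replay_id, event_type, direction, payload_json)
        self.entries.append(entry)
        return entry
```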

Error Events

Lastly, we published custom error events recording details, such as error codes and error descriptions, at pertinent points in the Apex classes. This made the design future-proof: the MDM system could receive and process error events just as it would a normal data event. MDM could, for example, send them to a common error logging infrastructure on its end, or fix the error and resend the corresponding data event to Salesforce.

On the Salesforce side, since every event is logged to the Log Entry object, we could run a report of all error events and evolve a process to triage and fix different types of errors, including informing MDM data stewards when an error spanned both systems.
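Here is a small Python sketch of that triage step. The error codes, entity names, and the `spans_both_systems` flag are hypothetical stand-ins for whatever the error events actually carry. It summarizes error events by code, the way a report grouped by error code would, and picks out the ones that need MDM data stewards.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ErrorEvent:
    error_code: str
    description: str
    entity: str
    spans_both_systems: bool  # hypothetical flag: needs MDM data stewards involved


def triage(errors: list) -> dict:
    """Summarize logged error events by code and list the ones to route to MDM."""
    by_code = Counter(e.error_code for e in errors)
    for_mdm = [e for e in errors if e.spans_both_systems]
    return {"by_code": dict(by_code), "for_mdm_stewards": for_mdm}
```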

Technology Stack

The technology stack involved in this integration included the following:

- Informatica Master Data Hub as the MDM system

- Dell Boomi as the middleware layer with Salesforce Platform Events Connector

- Salesforce, of course

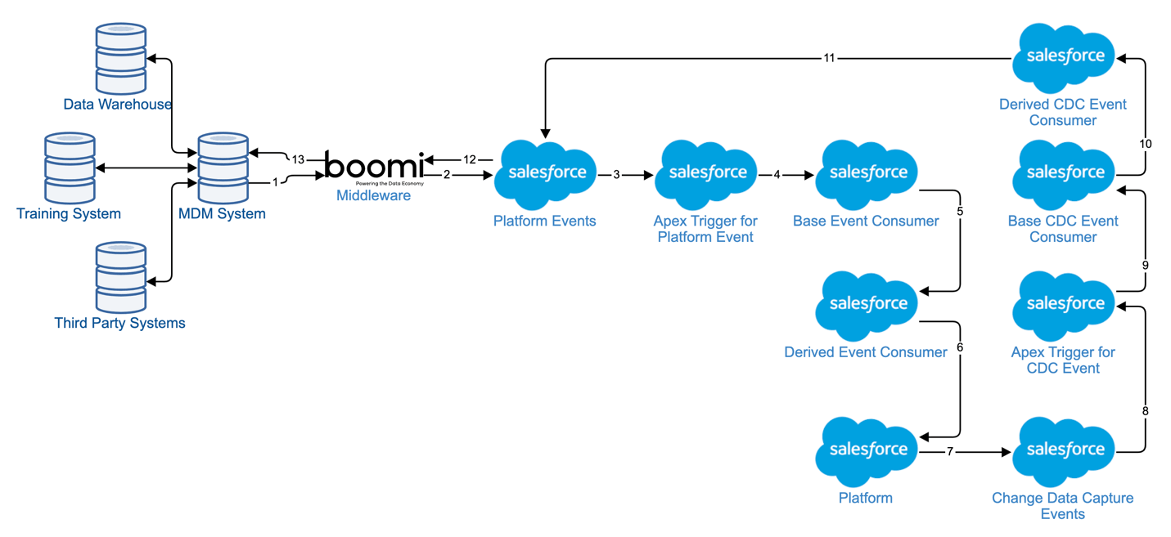

At a high level, the data flow was as follows:

- MDM sources data from diverse sources (4-5 internal and external systems) and produces master data records – either net new records or updates to existing records.

- Dell Boomi picks up such master data records, then assembles and sends the relevant platform events per the event-based interface design

- The Salesforce Platform transfers control to the Apex trigger responsible for handling that event

- The Apex trigger passes control to the handler, the Base Event Consumer class

- The Base Event Consumer class logs the incoming event, reads the header to determine which Derived Event Consumer class to instantiate, and passes control to that class

- The Derived Event Consumer class executes the necessary inserts/updates

- The Salesforce Platform generates CDC events automatically

- The Salesforce Platform transfers control to the Apex trigger responsible for handling that CDC event

- The Apex trigger passes control to the handler, the Base CDC Event Consumer class

- The Base CDC Event Consumer class logs the incoming CDC event, reads the header to determine which Derived CDC Event Consumer class to instantiate, and passes control to that class

- The Derived CDC Event Consumer class extracts data from the CDC event and, unless the change should be suppressed, publishes the outgoing platform event

- Boomi gets the event from Salesforce

- Boomi extracts relevant data and updates MDM system as appropriate

Salesforce and Postman

Another technology worth noting here was Postman. We used Postman and the Salesforce API collection (freely available for download) to unit test sending platform events to Salesforce, thereby simulating the MDM system. That way, we ironed out all the kinks on the Salesforce side long before the MDM team was ready to test jointly. Again, it saved us countless hours of cross-team testing effort, which can get expensive pretty quickly, especially when consultants are involved, as in our case.
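A platform event can be published over the REST API as an ordinary sObject insert against the event’s API name, which is the kind of call such a Postman test sends. Here is a Python sketch that builds the same request for scripted tests. The event name `MDM_Change__e`, its `Payload__c` field, the instance URL, the API version, and the token are placeholders, not the project’s actual values.

```python
import json
import urllib.request


def build_publish_request(instance_url: str, api_version: str, event_name: str,
                          fields: dict, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a REST call that publishes one platform event."""
    url = f"{instance_url}/services/data/v{api_version}/sobjects/{event_name}/"
    return urllib.request.Request(
        url,
        data=json.dumps(fields).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {access_token}",
                 "Content-Type": "application/json"},
    )


req = build_publish_request(
    "https://example.my.salesforce.com", "59.0", "MDM_Change__e",
    {"Payload__c": json.dumps({"header": {"entity": "Contact"}, "payload": {}})},
    "ACCESS_TOKEN",
)
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would actually send it.
```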

Salesforce Integration Results

Cost Savings

We’ve clearly been able to deliver on the enterprise architecture goal of publish-subscribe style systems integrations. The ease with which we can build such an integration based on a combination of Platform Events and Change Data Capture has helped our clients reduce implementation costs on the Salesforce side by at least half.

Time Savings

More importantly, we were able to deliver a mission-critical integration in weeks instead of months, contributing handsomely to the client’s overall timeline goals. And the scalability of the design stands the client in good stead for years to come.

System Performance

We’ve observed lightning-fast data exchange using platform events. In preliminary tests, we’ve observed processing speeds upwards of 200 events per second. The full round trip, from receiving events to processing them, sending return events, and processing those returned events in the MDM system, happened in the blink of an eye.

Your Salesforce Integration Next Steps

In any integration scenario involving Salesforce, I highly recommend considering Platform Events & Change Data Capture as an option. It is a very cost-effective, robust, and scalable solution, and dare I say, an architect’s dream!

My team and I work with organizations to build and launch strategic integrations between Salesforce and other critical business systems. If you need help with yours, feel free to reach out to our team.

Thanks for documenting your experience!! However, I just have a small question to understand and clarify the deadlock scenario on which the theme is based and for which the solution framework on the Salesforce end is designed.

Was there a need or scenario for data to flow back from Salesforce to your MDM for the “contact” object? Since Contact is a slave in your Salesforce instance and is mastered in your MDM, trying to synchronize it back from Salesforce to MDM (downstream to upstream) for this specific object is what causes the recursion in the first place. Ideally, avoiding that would remove the need for a custom framework on the Salesforce end to handle and encapsulate CDC with PE.

This is just to understand and enlighten myself NOT to challenge 🙂

I think I allude to this in my post. But a key consideration in any integration is the handshake, i.e., letting the other system know which ID in my system corresponds to the record you sent me. That way, both systems are set up for future updates in either system. So that was the primary use case, among others I won’t go into here. As far as the recursion goes, that’s why the framework could “suppress” the CDC event under certain circumstances. As you point out, if it was an update that Salesforce received from the other system, there’s nothing to send back, so we suppress it and avoid recursion. But if it was an insert, we send back the ID at the very least. Hope that helps. Thanks for your interest and comments.