I like simple rules. Like the 3-2-1 rule for backups which tells us to keep 3 backups of anything that is important, store them on at least 2 different backup media, and keep at least 1 backup in a different place.

Applying that rule to your FileMaker backups, it is only natural that the question comes up: “How do I make backups to somewhere different than the FileMaker Server machine?”

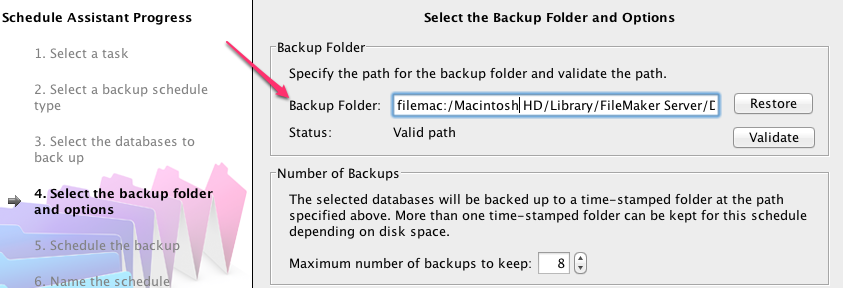

FileMaker Server lets us specify a path where the backups will be saved to, and it defaults to its own backups folder, on the same drive as where FileMaker Server itself is installed.

So can we specify a path here that would point to a network location? The short answer is no. There is an article in the FileMaker Knowledge base that addresses this. While the article is about OSX, the same applies to Windows.

At the bottom of this very short entry they specify a few reasons why backing up remotely using a backup schedule is not supported or not a good idea.

- Network backups will always take longer than local backups.

- Network connections can break for various reasons.

- There are fewer variables to deal with, in the event something should fail.

I agree with this completely. Let me expand a bit on those reasons.

It would take longer: copying across the network is always going to be slower than what the server can do with its own disks. That’s pretty obvious. Keep in mind that FileMaker Server pauses the hosted file at the end of the backup process to synchronize any changes that were made to the files since the start of the backup. That’s when the users will see the most impact. That’s what you want to minimize. So, backing up locally is going to have a smaller impact on the users. In addition, the backup will consume much less CPU time and network bandwidth when the backup is done locally.

The setup would be fragile: the completion of the backup now depends on more factors, such as the destination being available and the privileges having been set correctly and maintained that way. Remember that FileMaker Server – and by extension its schedules – runs as “local system” on Windows and as the “fmserver” user (a member of the “fmsadmin” group) on OSX. These accounts do not have write privileges to network resources. So you’d have to elevate their rights and document that in case a reinstall is required sometime in the future. If any of these factors breaks down, the backup run fails and you are left without a backup.

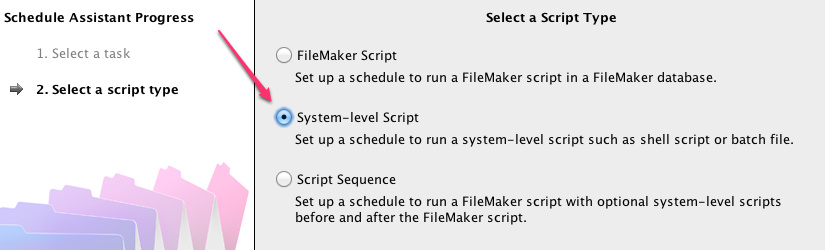

So am I not contradicting myself here? If local backups are preferred, what about the “1” in the 3-2-1 rule? We can still push out a backup to another location, using the FileMaker Server tools. We can schedule an OS-level script to run after our local backup is done to copy the backup set over to a network location. This can be done with batch files or VBScript on Windows and with shell scripts or AppleScript on OSX.

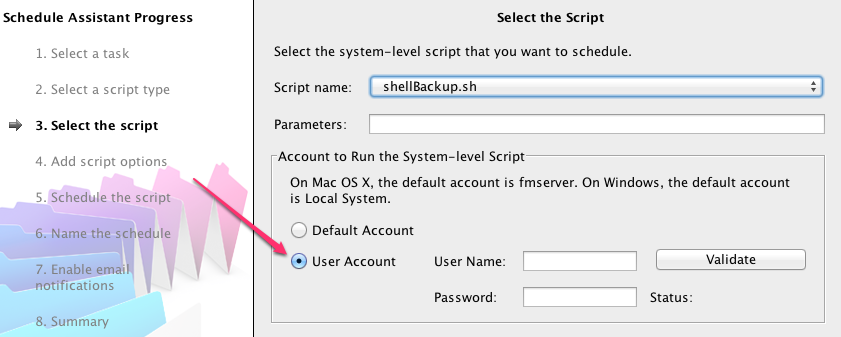

When you set up a system script you can specify OS credentials that already have the proper privileges without having to modify the FileMaker Server defaults.

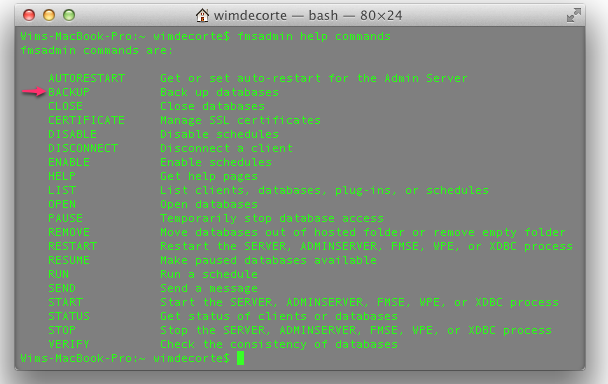

We can even avoid having to have two schedules and getting the timing just right, by doing everything in just one OS-level script. The FileMaker Server command line allows us to trigger a backup.

So with just one OS-level script we would have two backups, one local and one remote, without undue hardship on our users and without making our deployment more fragile than it needs to be.
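A minimal sketch of what such a single script could look like on OSX. The `FMSADMIN`, `LOCAL_BACKUPS` and `REMOTE_DEST` variables, the `FMS_USER`/`FMS_PASS` credentials and the `backup_and_copy` function name are illustrative assumptions, not FileMaker Server defaults. The sketch also assumes that `fmsadmin backup` only returns once the backup has finished; check `fmsadmin help backup` on your version before relying on that.

```shell
#!/bin/bash
# Sketch: one script that makes the local backup, then copies it off the server.
# All values below are illustrative assumptions; adjust them to your deployment.
FMSADMIN="${FMSADMIN:-fmsadmin}"                     # FileMaker Server command line tool
LOCAL_BACKUPS="${LOCAL_BACKUPS:-/Library/FileMaker Server/Data/Backups}"
REMOTE_DEST="${REMOTE_DEST:-/Volumes/fmprobackup}"   # hypothetical mounted network share

backup_and_copy() {
    # Step 1: local backup. If it fails, stop; there is nothing new to copy.
    "$FMSADMIN" backup -y -u "$FMS_USER" -p "$FMS_PASS" || {
        echo "local backup failed" >&2
        return 1
    }
    # Step 2: copy into a time-stamped folder on the remote location.
    # This copies everything under LOCAL_BACKUPS; in practice you would
    # narrow it to the newest backup set.
    local target="$REMOTE_DEST/$(date +%Y%m%d%H%M%S)"
    mkdir -p "$target" && cp -R "$LOCAL_BACKUPS/." "$target/" || {
        echo "remote copy failed; local backup is still available" >&2
        return 2
    }
}
```

Calling `backup_and_copy` as the last line of the script and running it from a single system-script schedule would replace the two separate schedules. The two return codes keep "no backup at all" distinguishable from "local backup only".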

Hi Wim, great post.

Definitely a fan of using shell scripts to roll custom backups.

We had a lively debate at a NYFMP.org meeting about the pros and cons of backing up to a FireWire drive that, to quote the knowledge base article, is a “drive connected directly to the machine”, perhaps with FireWire 800. Wondering if you have heard any caveats regarding that setup; I can find nothing in the FileMaker Server docs.

Thanks.

Tony

Hi Tony,

Some of the same concerns apply: the backup will be slower, and so take longer than necessary, and the external drive has to be mounted (or mapped to a drive letter) for the backup to succeed, which adds a vulnerability that may cause the backup to fail. With an AppleScript, shell script, batch file, or VBScript you can trap that kind of error when you copy or move the backup set after the local backup is done, and you will still have the local backup set even if the external drive wasn't available. Privileges may be an issue to overcome too.
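As a rough illustration of trapping that kind of error in a shell script: the function name `copy_backup_set` and both arguments are hypothetical, and testing for a writable directory is only a simple stand-in for "the drive is mounted and the privileges are right".

```shell
#!/bin/bash
# Sketch: copy a finished backup set to an external or network volume,
# trapping the case where that volume is not available.
# Paths are passed in by the caller; nothing here is a FileMaker default.

copy_backup_set() {
    local backup_set="$1"   # folder produced by the local backup
    local volume="$2"       # mount point of the external drive or share

    # Trap 1: the volume is not mounted, or not writable (privileges).
    if [ ! -d "$volume" ] || [ ! -w "$volume" ]; then
        echo "WARNING: $volume not available; keeping local set $backup_set" >&2
        return 1
    fi

    # Trap 2: the copy itself fails part-way (drive unplugged, disk full).
    if ! cp -R "$backup_set" "$volume/"; then
        echo "WARNING: copy to $volume failed; local set $backup_set is intact" >&2
        return 2
    fi
}
```

Either failure only produces a warning and a non-zero return code. The local backup set is never moved or deleted, so it stays available whatever happens to the external drive.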

HTH

Wim

And what about your hosts doing backups: are these effective and secure, and would they keep several backups dating back a while? I guess this is up to individual hosts. It’s just such a mission doing backups if you’re not quite tech savvy! And there are so many different things on the web about backing up, but they all seem to be written in hieroglyphics for the average person.

Good point. It can be one of the drawbacks of a cloud hosted server that you do not have full control over the backups. That is certainly a risk to consider, and mitigating it takes frequent testing to make sure that backups are running, that the backups are valid, AND that you can get to them.
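Part of that testing can be automated. The sketch below, with a hypothetical function name `backup_is_fresh` and an assumed default of 24 hours, only checks that the backup folder is reachable and contains a recently modified file. It says nothing about whether the backup is valid; for that you would still have to open a restored copy.

```shell
#!/bin/bash
# Sketch: report whether a backup folder contains a recently modified file.

backup_is_fresh() {
    local folder="$1"                # where the backups are expected to land
    local max_age_min="${2:-1440}"   # allowed age in minutes (default: 24 hours)

    # Trap: we cannot even get to the backups.
    [ -d "$folder" ] || { echo "cannot reach $folder" >&2; return 2; }

    # find prints files modified less than max_age_min minutes ago;
    # a single hit is enough to call the backup fresh.
    if [ -n "$(find "$folder" -type f -mmin "-$max_age_min" -print 2>/dev/null | head -n 1)" ]; then
        return 0
    fi
    echo "no backup newer than $max_age_min minutes in $folder" >&2
    return 1
}
```

Run on a schedule, for example as `backup_is_fresh /path/to/backups 1440`, a non-zero return code is the cue to go and look before the backup is actually needed.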

Here you write:

“At the bottom of this very short entry they specify a few reasons why backing up remotely using a backup schedule is not supported or not a good idea.

1) Network backups will always take longer than local backups.

2) Network connections can break for various reasons.

3) There are fewer variables to deal with, in the event something should fail.

I agree with this completely. Let me expand a bit on those reasons.”

1). Really? We live in the age of gigabit LAN. I stream 1080p video across my network. Wirelessly even!

2). So do hard drives and servers, which is why we do a backup in the first place.

3). There’s also 0 redundancy. As a matter of fact, why have a backup at all? If your server fails, your backups certainly aren’t going to do anything.

Hi James,

Thanks for your comments. Network performance is always going to be slower than internal disk I/O, so a network backup is always going to take longer than an internal backup. Remember that FileMaker Server suspends user activity at the end of each backup to synchronize changes that have happened since the start of the backup. And that's the time frame you want to minimize. That time frame is going to be longer for a network backup. And the other aspect is risk mitigation. Backing up directly over the network carries more risk of failure than an internal backup. That is not the same as saying that a network backup *IS* going to fail, just that it is more likely. Given the importance of backups, that is a risk to be recognized and mitigated.

I certainly did not want to create the impression that I am advocating doing only internal backups. On the contrary. That is why the article follows up on the "3-2-1" principle of backups and explains how you can use the OS tools to make sure you have backups away from the FileMaker Server machine. This approach, I believe, increases the chances of always having a backup to fall back on.

Re: FMS13 – Mac OS 10.9. I’ve been using Rob Russell SumWare Consulting’s shell script for many years, successfully, to back up my local server FM backups to a network drive that’s connected to Dropbox. This script worked fine until I updated my OS to Mavericks. The script still runs on FMS13, but it no longer places the backup on my network share point. I feel like Mavericks might not like fmserver being the owner of this script, but FMS reports no errors. Here’s the shell script code. Any suggestions would be appreciated.

```shell
#!/bin/bash
# GOOD 11/29/10 5 Dec 2005 Rob Russell SumWare Consulting
# only change the next two lines of this script to suit your installation
source="/Library/FileMaker Server/Data/Backups/Daily/"
destination="/FM_Backup/"
# source defines the folder you want to archive. Only archive
# FileMaker Server backups, not the master files hosted by FMServer.
# destination is the place you want the archives sent
# ( best this be a separate volume )
# suffix is the time stamp to append to the destination
suffix="$(date +%Y%m%d%H%M%S).zip"
# create symlink files in tmp folder, to deal with spaces in file paths
sourcelink="/tmp/sumware001.ln"
ln -s "$source" $sourcelink
destinationlink="/tmp/sumware002.ln"
ln -s "$destination$suffix" $destinationlink
# copy with pkzip compression and preserve resources
ditto -c -k --rsrc "$sourcelink" $destinationlink
#tar czvpf "$sourcelink".tar.gz "$destinationlink"
# clean up the symlinks we created earlier
mkdir /Volumes/fmprobackup
/sbin/mount_afp afp://Administrator:password@10.0.0.20/Austin/Backups/FileMaker_13 /Volumes/fmprobackup
disktool -r
# austin="/Volumes/Austin/Backups/FileMaker_13/"
austin="/Volumes/fmprobackup/Backups/FileMaker_13/"
cp $destination$suffix $austin
#rm -f "$sourcelink"
#rm -f "$destinationlink"
#find $destination -name "*.zip" -mtime +10 -delete
#find $austin -name "*.zip" -mtime +10 -delete
#umount /Volumes/fmprobackup
```

Discovered the solution… finally.

…

```shell
mkdir /Volumes/fmprobackup
/sbin/mount_afp afp://Administrator:password@10.0.0.20/Austin/Backups/FileMaker_13/ /Volumes/fmprobackup/
disktool -r
austin="/Volumes/fmprobackup/"   # WAS "/Volumes/fmprobackup/Backups/FileMaker_13/"
```

…

Thanks for the follow-up, Paul!

Where can we get access to the tool used above?

Hi Aziz, that was 12 years ago 🙂

If you are after the shell script then it’s really just two lines of code: one to use the fmsadmin command to either run a backup or run an existing schedule and a 2nd line to copy or rsync the finished backup to any location you want.