A major new feature that rolled out with FileMaker Server 18 is Data Restoration. In a nutshell: FileMaker Server keeps a transaction log of all the changes (data and schema) that are being made to the databases; if FileMaker Server or the server machine were to crash, it uses these logs at the next startup to make sure that all of the logged transactions are applied to the database.

FileMaker’s white paper explains the basic workings of Data Restoration. I recommend the white paper as a very worthwhile read and won’t repeat much of what it says. However, I do want to highlight some important practical aspects of the feature. Then, I’ll walk you through a crash event, show how the data restoration works, and list the details to monitor.

It’s On By Default

First off: the feature is on by default when you install FileMaker Server 18. You can turn it off only by making an Admin API or CLI call to your server.

If you have not used the Admin API yet, the FileMaker community has released a good set of tools that use it. You can also use an API testing tool like Postman to execute the necessary calls. The data restoration setting is part of the “General Configuration,” and you can check its current state with a GET call to the proper endpoint:

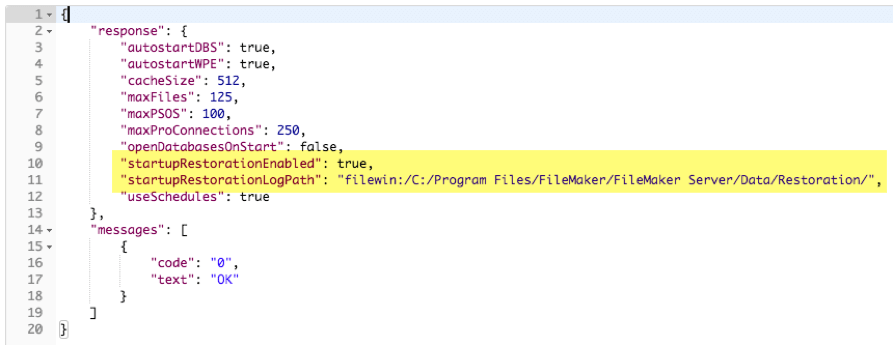

https://{{server}}/fmi/admin/api/v2/server/config/general

The two new configuration settings are highlighted below in the JSON returned by the API call:

A PATCH call to the same endpoint is all you need to toggle the feature on or off, or to change the path where the logs are stored.
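For readers who prefer a script over Postman, here is a minimal Python sketch of the GET and PATCH calls against the General Configuration endpoint, using only the standard library. It assumes the FileMaker Server 18 Admin API login endpoint is `/fmi/admin/api/v2/user/auth` (Basic auth in, bearer token out) and that the two Data Restoration settings are named `startupRestorationEnabled` and `startupRestorationLogPath`. The host name, the token's location in the login response, and both key names are assumptions; verify them against the JSON your own server returns.

```python
import base64
import json
import urllib.request

# Hypothetical host; replace with your own FileMaker Server address.
BASE = "https://fms.example.com/fmi/admin/api/v2"

# Assumed key names of the two Data Restoration settings; verify them
# against the JSON your own GET call returns.
ENABLED_KEY = "startupRestorationEnabled"
LOG_PATH_KEY = "startupRestorationLogPath"


def basic_auth_header(user: str, password: str) -> str:
    """HTTP Basic Authorization header value for the login call."""
    raw = f"{user}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def login(user: str, password: str) -> str:
    """Log in via the (assumed) v2 auth endpoint and return the bearer token."""
    req = urllib.request.Request(
        f"{BASE}/user/auth", data=b"", method="POST",
        headers={"Authorization": basic_auth_header(user, password),
                 "Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["response"]["token"]  # assumed response shape


def get_general_config(token: str) -> dict:
    """GET the General Configuration and return the parsed JSON body."""
    req = urllib.request.Request(
        f"{BASE}/server/config/general",
        headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def restoration_settings(body: dict) -> dict:
    """Extract the two Data Restoration settings from a config response."""
    settings = body.get("response", {})
    return {ENABLED_KEY: settings.get(ENABLED_KEY),
            LOG_PATH_KEY: settings.get(LOG_PATH_KEY)}


def build_patch(token: str, enabled=None, log_path=None) -> urllib.request.Request:
    """Build (but do not send) a PATCH that changes only the settings passed in."""
    payload = {}
    if enabled is not None:
        payload[ENABLED_KEY] = enabled
    if log_path is not None:
        payload[LOG_PATH_KEY] = log_path
    if not payload:
        raise ValueError("nothing to change")
    return urllib.request.Request(
        f"{BASE}/server/config/general",
        data=json.dumps(payload).encode("utf-8"), method="PATCH",
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"})
```

Against a real server, `restoration_settings(get_general_config(token))` reads the current state, and `urllib.request.urlopen(build_patch(token, enabled=False))` would turn the feature off. Building the PATCH separately from sending it makes it easy to inspect the request body before changing anything on a production server.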

Performance Impact

Speaking of the path where the logs are stored, we see two fairly immediate performance impacts with this feature:

- The required disk space: FileMaker Server needs 16GB of disk space for this feature. It claims 8GB of that immediately and pre-allocates it for its logs. It uses the additional 8GB only when a data restoration is needed but Server cannot perform it immediately; in that case, it saves the old logs and starts a new set. A typical scenario is one where the encryption key for the hosted files has not been saved on FileMaker Server, so the restoration has to wait until an administrator enters the key manually.

16GB of disk space is sizeable. It is not uncommon for servers to be configured with less than the recommended disk space, especially in virtualized environments. You must account for the extra disk space this feature requires when upgrading. If it eats up much of the currently available disk space, the extra consumption may severely affect the server’s performance.

- The required disk activity: if your solution creates, deletes, or edits many records, FileMaker Server will spend more resources writing those transactions to its log before applying them to the database. If your machine has marginal specs (slow disk I/O, slow processors, low free disk space, etc.), you’ll certainly feel this effect. Consider your current server’s specs when deciding to upgrade, and favor high-speed disks when spending money on upgraded hardware.
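The disk space arithmetic above (8GB pre-allocated plus 8GB held in reserve, 16GB in total) is easy to turn into a pre-upgrade check. The following is a minimal Python sketch, assuming a GB means 1024³ bytes; the `headroom_bytes` parameter, meant for the space your files, backups, and OS need anyway, is a hypothetical addition and not a FileMaker setting.

```python
import shutil

GB = 1024 ** 3                      # assumption: GB taken as 1024**3 bytes
PREALLOCATED_LOG_BYTES = 8 * GB     # claimed immediately for the transaction logs
RESERVE_LOG_BYTES = 8 * GB          # used only when a restoration must be postponed
REQUIRED_BYTES = PREALLOCATED_LOG_BYTES + RESERVE_LOG_BYTES  # 16GB in total


def has_room_for_restoration_logs(free_bytes: int, headroom_bytes: int = 0) -> bool:
    """True if free space covers the 16GB the feature needs plus any headroom."""
    return free_bytes >= REQUIRED_BYTES + headroom_bytes


def check_volume(path: str, headroom_bytes: int = 0):
    """Check the volume holding `path` (e.g. the planned log folder).

    Returns (enough_space, free_gigabytes).
    """
    free = shutil.disk_usage(path).free
    return has_room_for_restoration_logs(free, headroom_bytes), free / GB
```

For example, `check_volume("D:\\", headroom_bytes=20 * GB)` reports whether drive D: can hold the logs while keeping an extra 20GB free; the 20GB figure is only an illustration.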

Logs

The question has already come up in the community: “Can we use those transaction logs?” The answer is no. These logs are strictly for FileMaker Server’s data restoration. If you need transactions in your scripted workflows, you still need to build them with the existing techniques.

Backup Strategy / Disaster Recovery Strategy / Business Continuity

Where does the new feature fit in with the other tools that help us with a backup and disaster recovery strategy?

It complements the existing tools rather than replacing any of them. Here is how they all fit together:

- Data Restoration gives you just one restore point: the database as it was at the time of the crash. The restore time is fast and pretty much automatic at the moment FileMaker Server starts again.

- Progressive backups give you two restore points that you can rely on in case the Data Restoration fails. Their age depends on the interval you use, and that interval is typically relatively short (5-15 minutes). The restore time is usually fast but does involve copying the backup set over.

- Backup schedules give you as many restore points as you have configured (hourly, daily, weekly, etc.). Restore time is the same as with progressive backups unless you need to retrieve a backup from an off-machine / off-site location.

Just like progressive backups, Data Restoration does not give you an off-machine or off-site backup. For that, you need to combine the regular backup schedules with some OS-level scripting.

Test

If you are going to rely on this feature as part of your overall backup or disaster recovery strategy, you must test it thoroughly and understand how it works.

Probably the first thing to test for is whether the data restoration is actively running. It may turn itself off at startup when it detects that there is not enough free disk space. You can use the Admin API to perform scheduled testing to verify that the feature is on, and you can also monitor the FileMaker Server event log for messages that the server failed to start the data restoration at startup. Our upcoming DevCon session on server monitoring with Zabbix will include this kind of functionality.
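A scheduled check of the on/off flag can be sketched in a few lines of Python. This is a minimal sketch, assuming the General Configuration JSON has already been fetched with the Admin API GET call described earlier and that the flag is named `startupRestorationEnabled` (an assumed key name). `fetch_config` and `notify` are hypothetical callbacks you would wire to your own API client and alerting channel, such as email or a monitoring tool.

```python
import time
from typing import Callable, Optional

# Assumed key name for the on/off flag in the General Configuration JSON.
ENABLED_KEY = "startupRestorationEnabled"


def restoration_alert(config_body: dict) -> Optional[str]:
    """Return an alert message if Data Restoration is not on, else None."""
    value = config_body.get("response", {}).get(ENABLED_KEY)
    if value is True:
        return None
    if value is None:
        return f"'{ENABLED_KEY}' missing from config response; verify the key name"
    return "Data Restoration is OFF (it may have turned itself off at startup for lack of disk space)"


def run_checks(fetch_config: Callable[[], dict],
               notify: Callable[[str], None],
               interval_s: float = 3600,
               rounds: int = 1) -> int:
    """Poll the config `rounds` times, calling notify() on each problem.

    Returns the number of alerts raised.
    """
    alerts = 0
    for i in range(rounds):
        message = restoration_alert(fetch_config())
        if message:
            notify(message)
            alerts += 1
        if i < rounds - 1:
            time.sleep(interval_s)  # wait before the next poll
    return alerts
```

Treating a missing key as an alert, rather than as "off", keeps a renamed or misspelled setting from silently passing the check.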

A second test to perform from time to time would be to crash the server and become familiar with the normal course of operation that a Data Restoration goes through. That way, you can verify that the data restoration took its normal course and spot anomalies when it does not.

Obviously, you would do this on a test machine with a backup of your solution. One of the challenges in testing features like this is generating enough relevant load so that the test is somewhat representative of a typical situation on your server. After all, you want to ensure you’ve engaged the transaction logging part of the server so that you have something in the logs to restore.

Our Beta Test Process

During beta testing, we used the following setup:

- An AWS EC2 Windows instance with FileMaker Server 18 installed

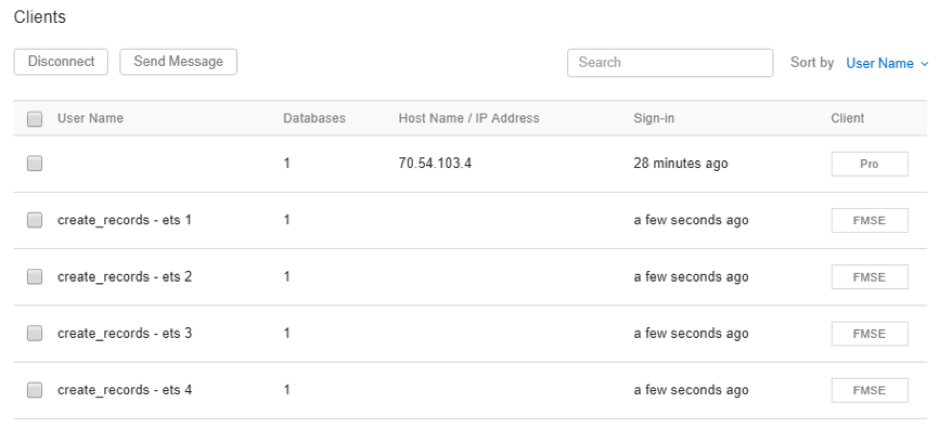

- A hosted file that creates records through PSoS, in a continuous loop, with the “Wait for completion” option turned off. This allows us to spawn as many of these as we want, generate a good load, and pretty much guarantee that FileMaker Server is writing to its transaction log when we induce a crash.

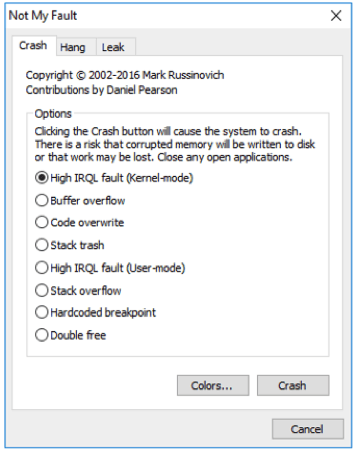

- Microsoft’s “NotMyFault” utility (part of Sysinternals), which allows you to crash Windows

With the server busy creating many records through the PSoS sessions, I induce a crash with the Microsoft tool:

Post-Crash

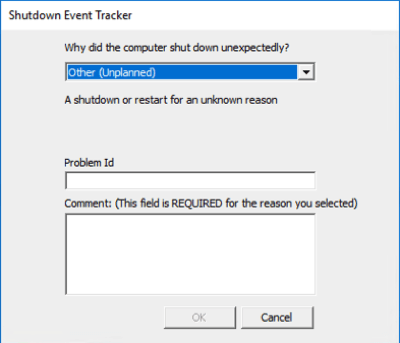

After the server recovers from the crash and reboots, let’s look at what happened. There is the obvious evidence of the crash with Windows prompting us to acknowledge the unexpected shutdown:

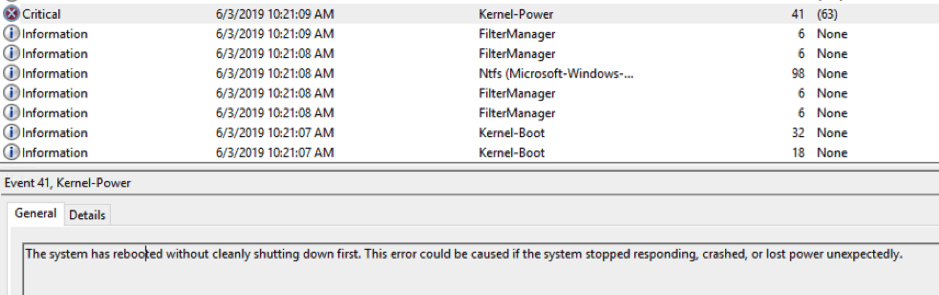

And an entry in the Windows System log, at the time Windows rebooted itself, confirming that the crash did indeed happen:

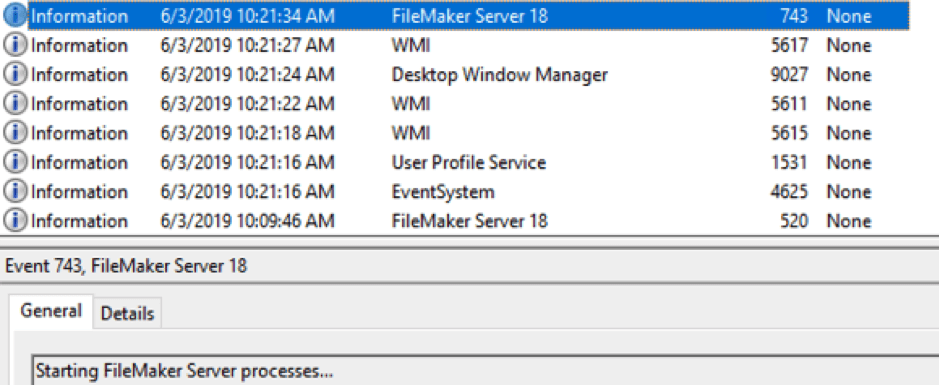

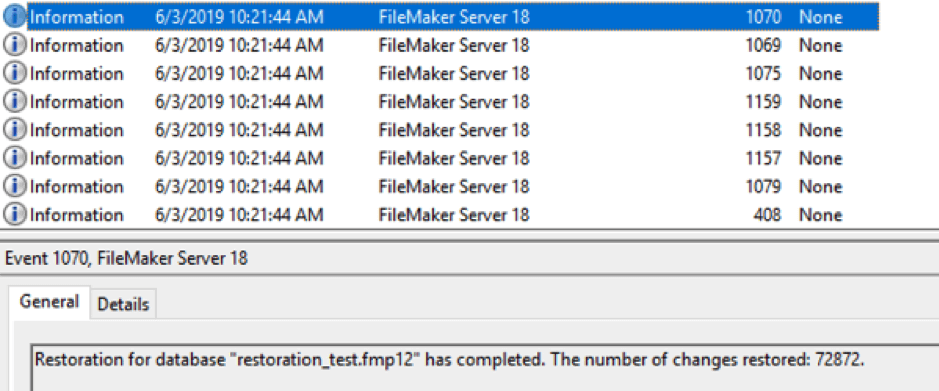

In the Application event log, where FileMaker Server also logs its events, we see FMS starting up at 10:21 am, at the time Windows restarted:

As per best practices, I have FileMaker Server configured to start up but not auto-host any files. This allows administrators to inspect the sequence of events that brought down the machine before deciding whether to continue with the files as they are.

At this point in time, my FileMaker Server is operational, but no files are being hosted.

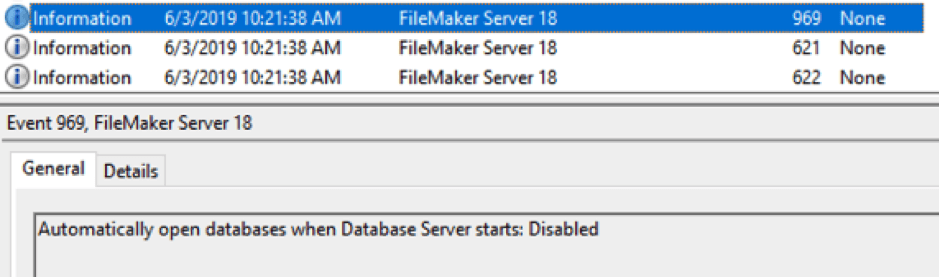

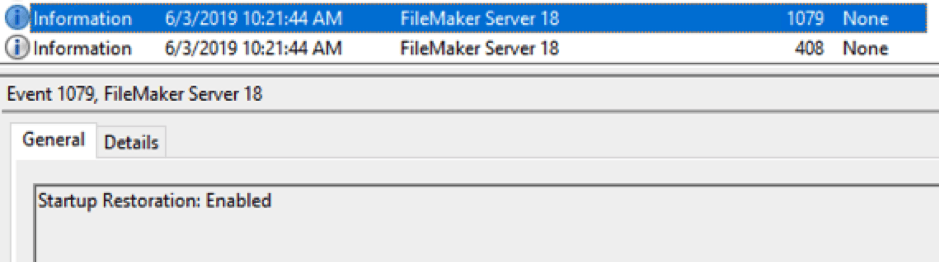

A little further in the event log, we have confirmation that the Data Restoration feature is enabled:

This is an important event to confirm because, without it, we could still open the files, but there would be no attempt to restore them to their pre-crash state!

Restoration

The next event that we want to see is that the restoration has been applied and completed without error:

Without this event, we cannot continue to use this file, and we would need to revert to a progressive backup or a regular backup.

Note that FileMaker Server performed the data restoration when its processes started, not when the file was opened to be hosted. Given that we have auto-hosting disabled, at this point in time, the FileMaker file is not yet hosted and available to the users.

The only exception is when the file has Encryption-at-Rest (EAR) enabled and the encryption key is not saved. In this scenario, FileMaker Server cannot perform the data restoration at this point and postpones it until the files are opened through the Admin Console and the administrator enters the encryption key.

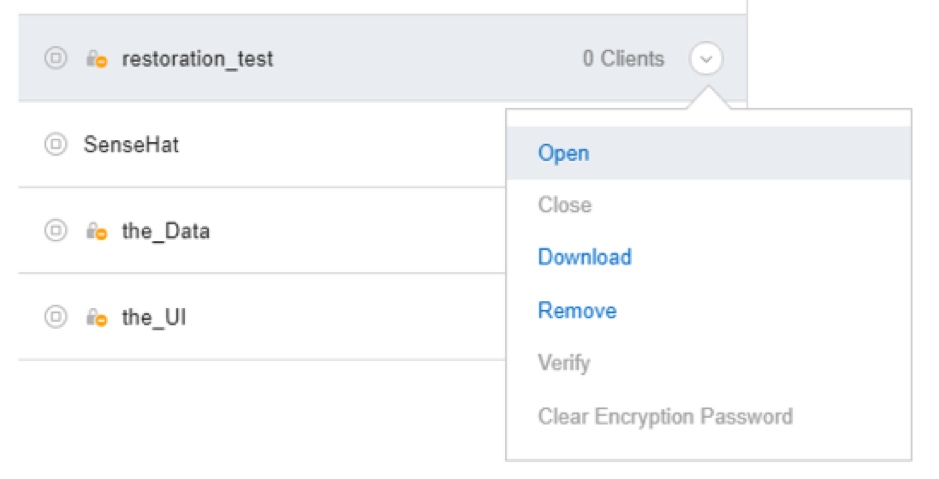

In our scenario, the data restoration has been done successfully, and we can proceed to open the file in the admin console:

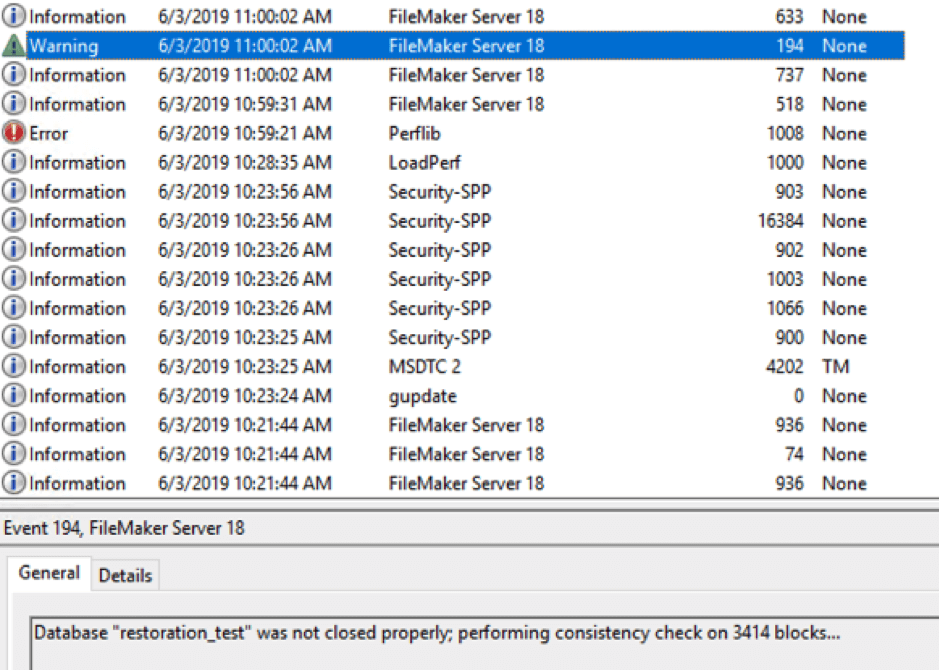

When we go back to the event log, we see FileMaker Server logged an “improperly closed” event when we opened the file:

This is normal, albeit somewhat unexpected and a bit confusing: given that the data restoration was successful, we did not really expect FileMaker Server to log this warning. You must confirm that event ID 1070 (data restoration completed) precedes this warning event.

If the restoration was unsuccessful, you would still see this warning about the file being improperly closed, but the file would not have been restored to its pre-crash state. Per best practice, you cannot safely reuse a file in that state.
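The rule "event ID 1070 must precede the improperly-closed warning" can be checked mechanically once the events are exported. The following is a minimal Python sketch, assuming the Application event log entries logged since the restart have already been extracted into chronological `(timestamp, event_id, message)` tuples. Only event ID 1070 comes from the walk-through; matching the warning on the text "improperly closed" is an assumption about its wording. The check works at the server level, not per file, and it does not cover the Encryption-at-Rest case described above, where the restoration is deferred until the file is opened.

```python
from typing import List, Tuple

RESTORATION_COMPLETED_ID = 1070               # "data restoration completed"
IMPROPERLY_CLOSED_TEXT = "improperly closed"  # assumed wording of the warning


def restoration_verdict(events: List[Tuple[str, int, str]]) -> str:
    """Classify the events logged since the restart, in chronological order.

    Each event is a (timestamp, event_id, message) tuple.
    """
    restored = False
    for _timestamp, event_id, message in events:
        if event_id == RESTORATION_COMPLETED_ID:
            restored = True
        elif IMPROPERLY_CLOSED_TEXT in message.lower():
            # The warning is expected after a crash; what matters is
            # whether a completed restoration was logged before it.
            return "restored" if restored else "not restored: revert to a backup"
    return "restored" if restored else "no improperly-closed warning seen"
```

A "not restored" verdict maps to the advice above: do not reuse the file, and revert to a progressive or regular backup instead.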

In Conclusion

The new Data Restoration feature can play an important role in how we deal with crashes and outages.

Understanding its behavior is crucial to making sure that we can rely on the outcome of a FileMaker Server restart. After every restart, ask:

- Is the data restoration feature enabled?

- Did the data restoration complete successfully?

As always, feel free to leave questions and comments.

Excellent walk-through Wim, thanks for providing it.

Hi Wim,

Great article thanks for sharing. I’m curious to understand why there’s no mention in the release of FileMaker Server 18 about multi-core utilisation and the improved performance that was mentioned during the ETS programme. I am under the assumption startup restoration is a feature added as a necessity due to the increased number of threads FileMaker Server can utilise, leading to more potential for corruption?

Has the introduction of the transaction log and restoration option essentially negated any performance benefit seen by utilising more cores, and as a result is this why there was no mention on release of better performance (because there actually is none?) Our own tests with restoration on suggest 18 is slower than 17 when there’s over 3/4 users doing something at once – not what we wanted to see

Hi Daniel,

Thanks!

From my testing, the data restoration has not negated the potential performance benefits from better parallel processing. Not sure if you saw the test results from our “Punisher” tool that was discussed during ETS; it demonstrates that some things are faster and some are slower and much of that depends on the nature, design, concurrent load and hardware specs… in other words: there’s an infinite number of variables in this equation and the outcome on any given deployment can be hard to predict. It’s equally clear that the larger the load and the better the hardware, the bigger the positive impact of the better parallel processing. Conversely, a big load on sub-par hardware may see an overall performance hit depending on what the solution does and how it is designed. Long and short: given how many things affect the performance, I’m not surprised that FMI is showing restraint. Things will become a lot clearer as everyone gets more experience with the features. We’re hoping to release that “Punisher” at some point and discuss its results.

Very good write up, Wim. The information on the new feature in FileMaker 18 – data restoration is explained in detail. The data restoration process after a crash is clear. This new feature will be helpful and it may help to restore data successfully. Thanks for sharing this information with all.